Not that long ago, I remember Microsoft’s CEO Satya Nadella has been announcing a new version of SQL Server 2016. And all of a sudden fresh Community Technology Preview versions started appearing one by one (currently the latest version is CTP3.1). Having tested the new version, I would like to share my impressions.

I will now review the new T-SQL syntax features of SQL Server 2016: JSON, GZIP, DROP IF EXISTS, TRUNCATE TABLE by partitions…

# 1 – DROP IF EXISTS

CREATE TABLE dbo.tbl (

a INT, b INT,

CONSTRAINT ck CHECK (a > 1),

INDEX ix CLUSTERED (a)

)

Earlier, I had to check before deleting an object:

IF OBJECT_ID(N'dbo.tbl', 'U') IS NOT NULL

DROP TABLE dbo.tbl

But now, there is a shorter syntax:

DROP TABLE IF EXISTS dbo.tbl

In the easy way, you can delete child objects:

ALTER TABLE dbo.tbl DROP COLUMN IF EXISTS b ALTER TABLE dbo.tbl DROP CONSTRAINT IF EXISTS ck ALTER TABLE dbo.tbl DROP CONSTRAINT IF EXISTS ix

DROP statements can be combined for one parent object:

ALTER TABLE dbo.tbl DROP

COLUMN IF EXISTS b,

CONSTRAINT IF EXISTS ck,

CONSTRAINT IF EXISTS ix

DROP IF EXISTS is supported for almost all user objects (the complete list can be found here):

DROP TABLE IF EXISTS #temp DROP TABLE IF EXISTS ##temp DROP VIEW IF EXISTS dbo.view1 DROP PROCEDURE IF EXISTS dbo.proc1 DROP DATABASE IF EXISTS db

# 2 – SESSION_CONTEXT

In my practice, there were tasks on sharing parameters within a user session. Previously, I had to use CONTEXT_INFO, which was limited in size to 128 bytes:

DECLARE

@UserID SMALLINT = 1

, @LocaleID INT = 123

DECLARE @ctn VARBINARY(128)

SET @ctn = CAST(@UserID AS BINARY(2)) + CAST(@LocaleID AS BINARY(4))

SET CONTEXT_INFO @ctn

Now, everything has become a bit more comfortable with the new SESSION_CONTEXT function, which allows to store 256KB per session:

EXEC sys.sp_set_session_context @key = N'UserID', @value = 1

EXEC sys.sp_set_session_context @key = N'LocaleID', @value = 123

SELECT

UserID = SESSION_CONTEXT(N'UserID')

, LocaleID = SESSION_CONTEXT(N'LocaleID')

# 3 – CHECKDB + MAXDOP

By default, upon execution of DBCC CHECKDB command, the number of threads equal to the number of logical cores was used. Now, you can limit the number of threads so as not to reduce server performance on the whole:

DBCC CHECKDB(N'AdventureWorks2016CTP3') WITH MAXDOP = 4

Similar functionality is added to DBCC CHECKTABLE and DBCC CHECKFILEGROUP:

USE AdventureWorks2016CTP3

GO

DBCC CHECKTABLE('HumanResources.Employee') WITH MAXDOP = 4

DBCC CHECKFILEGROUP(1) WITH MAXDOP = 4

DBCC CHECKDB was slightly optimized in terms of checking filtered indexes and the PERSISTED COMPUTED columns. Additionally, the time for checking tables containing a large number of partitions was reduced.

# 4 – FORMATMESSAGE

In the previous versions, the FORMATMESSAGE function could use only previously added custom messages:

EXEC sys.sp_addmessage

@msgnum = 66667,

@severity = 16,

@msgtext = N'param1: %s, param2: %s'

DECLARE @msg NVARCHAR(2048) = FORMATMESSAGE(66667, N'one', N'two')

SELECT @msg

It is now possible to specify a random mask:

SELECT FORMATMESSAGE('val1: %+i, val2: %+d', 5, -6)

Many routine operations (like quoting or string concatenation) can be done more nicely:

SELECT FORMATMESSAGE('SELECT * FROM [%s].[%s]', SCHEMA_NAME([schema_id]), name)

FROM sys.objects

WHERE [type] = 'U'

# 5 – COMPRESS & DECOMPRESS

The integrated support for GZIP: COMPRESS and DECOMPRESS appeared in the new edition. In decoding, it is important to check the correct datatype to which the output is converted:

DECLARE @a VARBINARY(MAX) = COMPRESS('test test test')

SELECT

@a

, DECOMPRESS(@a)

, CAST(DECOMPRESS(@a) AS NVARCHAR(MAX)) -- issue

, CAST(DECOMPRESS(@a) AS VARCHAR(MAX))

Here, I encode the ANSI string and try to decode the received value:

----------------------------------------------------------- -------------------------------- ---------------- ----------------- 0x1F8B08000000000004002B492D2E5128811100026A5B230E000000 0x7465737420746573742074657374 整瑳琠獥⁴整瑳 test test test

# 6 – DATEDIFF_BIG

In SQL Server 2008, new parameters, MICROSECOND and NANOSECOND, were added to the DATEDIFF function, but when an excessively large date range was specified:

SELECT DATEDIFF(NANOSECOND, '20000101', '20160101')

this could lead to an error:

Msg 535, Level 16, State 0, Line 1 The datediff function resulted in an overflow. The number of dateparts separating two date/time instances is too large. Try to use datediff with a less precise datepart.

The new DATEDIFF_BIG feature was added for such situations:

SELECT DATEDIFF_BIG(NANOSECOND, '20000101', '20160101')

# 7 – AT TIME ZONE

In CTP3.0, a new system presentation appeared:

SELECT name, current_utc_offset, is_currently_dst FROM sys.time_zone_info

where you can get a list of time zones:

name current_utc_offset is_currently_dst -------------------------- ------------------ ---------------- Dateline Standard Time -12:00 0 UTC-11 -11:00 0 ... Central Standard Time -06:00 0 ... Pacific SA Standard Time -03:00 0 UTC-02 -02:00 0 ... UTC +00:00 0 GMT Standard Time +00:00 0 Greenwich Standard Time +00:00 0 ... Belarus Standard Time +03:00 0 Russian Standard Time +03:00 0 ...

Using AT TIME ZONE, you can display the time in a given time zone:

SELECT CONVERT(DATETIME2, GETDATE()) AT TIME ZONE N'Belarus Standard Time'

---------------------------------- 2015-12-02 14:51:02.1366667 +03:00

which can be parameterized:

DECLARE @tz NVARCHAR(256) = N'Belarus Standard Time'

SELECT

GETDATE() AT TIME ZONE @tz

, CONVERT(DATETIME2, GETDATE()) AT TIME ZONE @tz

---------------------------------- 2015-12-02 14:51:28.6266667 +03:00

What are the benefits of this feature? For example, you can display the time in other time zones based on the local time zone:

SELECT name, CONVERT(DATETIME,

SWITCHOFFSET(SYSUTCDATETIME() AT TIME ZONE name,

DATENAME(TzOffset, SYSDATETIMEOFFSET()))

)

FROM sys.time_zone_info

---------------------------------- ----------------------- Dateline Standard Time 2015-12-03 02:56:41.940 UTC-11 2015-12-03 01:56:41.940 ... Pacific SA Standard Time 2015-12-02 17:56:41.940 UTC-02 2015-12-02 16:56:41.940 ... UTC 2015-12-02 14:56:41.940 GMT Standard Time 2015-12-02 14:56:41.940 Greenwich Standard Time 2015-12-02 14:56:41.940 Central European Standard Time 2015-12-02 13:56:41.940 ...

# 8 – JSON

Support for JSON is one of the major features of SQL Server 2016. Starting from CTP2.0, you can generate JSON, by analogy with XML. The two constructions FOR JSON AUTO and FOR JSON PATH are supported:

SELECT TOP (2) name, database_id, source_database_id, create_date

FROM sys.databases

FOR JSON AUTO, ROOT('root')

{"root":

[

{"name":"master","database_id":1,"create_date":"2003-04-08T09:13:36.390"},

{"name":"tempdb","database_id":2,"create_date":"2015-12-02T11:34:36.080"}

]

}

SELECT TOP (2)

name

, [db.id] = database_id

, [db.scr_id] = source_database_id

, [db.date] = create_date

FROM sys.databases

FOR JSON PATH, ROOT

{"root":

[

{

"name":"master",

"db":{"id":1,"date":"2003-04-08T09:13:36.390"}

},

{

"name":"tempdb",

"db":{"id":2,"date":"2015-12-02T11:34:36.080"}

}

]

}

For the NULL values to be included in JSON upon generation, use INCLUDE_NULL_VALUES option:

SELECT TOP (1) name, database_id, source_database_id FROM sys.databases FOR JSON AUTO, INCLUDE_NULL_VALUES

[

{

"name":"master",

"database_id":1,

"source_database_id":null

}

]

NVARCHAR should be used for storing JSON, since no particular data type is provided:

DECLARE @json NVARCHAR(MAX) = (

SELECT key1 = 1, key2 = GETDATE()

FOR JSON PATH

)

SELECT @json

{"key1":1,"key2":"2015-12-02T15:45:05.530"}

You can use OPENJSON to make a selection from JSON. If there is only one record, then the result will be a «key-value»:

DECLARE @json NVARCHAR(MAX) = N'

{

"UserID" : 1,

"UserName": "JC Denton",

"IsActive": true,

"RegDate": "2015-12-02"

}';

SELECT * FROM OPENJSON(@json)

key value type ----------- ------------ ---- UserID 1 2 UserName JC Denton 1 IsActive true 3 RegDate 2015-12-02 1

This behavior can be used as “one more variant” of the string split:

DECLARE @a NVARCHAR(100) = '1,2,3' SELECT CAST(value AS INT) FROM OPENJSON(N'[' + @a + N']')

----------- 1 2 3

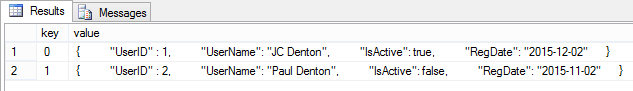

In case of several records:

DECLARE @json NVARCHAR(MAX) = N'

[

{

"UserID" : 1,

"UserName": "JC Denton",

"IsActive": true,

"RegDate": "2015-12-02"

},

{

"UserID" : 2,

"UserName": "Paul Denton",

"IsActive": false,

"RegDate": "2015-11-02"

}

]';

SELECT * FROM OPENJSON(@json)

the result is as follows:

Parse data from JSON is quite simple:

DECLARE @json NVARCHAR(MAX) = N'

[

{

"UserID" : 1,

"UserName": "JC Denton",

"IsActive": true,

"RegDate": "2015-12-02"

},

{

"UserID" : 2,

"UserName": "Paul Denton",

"IsActive": 0,

"RegDate": "2015-11-02"

}

]';

SELECT *

FROM OPENJSON(@json)

WITH

(

UserID INT,

UserName VARCHAR(50),

IsActive BIT,

[Date] DATE '$.RegDate'

)

UserID UserName IsActive Date ----------- --------------- -------- ---------- 1 JC Denton 1 2015-12-02 2 Paul Denton 0 2015-11-02

Use JSON_VALUE if you want to get a scalar expression:

DECLARE @json NVARCHAR(4000) = N'

{

"UserID" : 1,

"Detail": [

{ "Year":2016 },

{ "Year":2015, "Options": [{ "Visible":true }]

]

}'

SELECT

JSON_VALUE(@json, '$.UserID')

, JSON_VALUE(@json, '$.Detail[0].Year')

, JSON_VALUE(@json, '$.Detail[1].Year')

, JSON_VALUE(@json, '$.Detail[1].Options[0].Visible')

You can use ISJSON, if you want to make sure that the text is JSON:

DECLARE @json NVARCHAR(MAX) = N'{"ID" : 1}';

SELECT ISJSON(@json), ISJSON('')

For extracts an object or an array from a JSON string use JSON_QUERY:

DECLARE @json NVARCHAR(4000) = N'

{

"Main" :{

"Detail": {

"Name":"color",

"Value":"blue"

}

}'

SELECT JSON_QUERY(@json, '$.Main.Detail')

Also need keep in mind, that no specific type of indexes are provided for JSON, but there is a possibility to use COMPUTED columns:

DROP TABLE IF EXISTS dbo.Users

CREATE TABLE dbo.Users (

OrderID INT PRIMARY KEY,

JSON NVARCHAR(4000),

CONSTRAINT CK_IsJSON CHECK (ISJSON(JSON)=1),

Age AS (CONVERT(INT, JSON_VALUE(JSON, '$.Age')))

)

CREATE INDEX IX_Age ON dbo.Users(Age)

Now, an index can be created not for all JSON-based COMPUTED columns:

ALTER TABLE dbo.Users

ADD RegDate AS (CAST(JSON_VALUE(JSON, '$.Age') AS DATE))

GO

CREATE INDEX IX_RegDate ON dbo.Users(RegDate)

Msg 2729, Level 16, State 1, Line 15 Column 'RegDate' in table 'dbo.Users' cannot be used in an index or statistics or as a partition key because it is non-deterministic.

This bug should be fixed in the next version of CTP.

# 9 – ONLINE ALTER COLUMN

The ALTER COLUMN command can now be executed in the ONLINE mode. When you execute ALTER COLUMN, the column data will be available for reading, and blocking the Sch-M schema is applied only at the end of the ALTER operation, when switching to new pages with data (more details can be found here)

DROP TABLE IF EXISTS dbo.tbl

CREATE TABLE dbo.tbl (x VARCHAR(255) NULL)

GO

ALTER TABLE dbo.tbl

ALTER COLUMN x VARCHAR(255) NOT NULL

WITH (ONLINE = ON)

GO

ALTER TABLE dbo.tbl

ALTER COLUMN x NVARCHAR(255)

COLLATE Cyrillic_General_100_CI_AS NOT NULL

WITH (ONLINE = ON)

# 10 – TRUNCATE TABLE + PARTITIONS

In CTP2.0, the ability to work with separate partitions, not only with the entire table, was added for the TRUNCATE TABLE operation. You can specify not only a separate partition, but the whole range. Let’s create a table with partitions:

CREATE PARTITION FUNCTION PF (SMALLINT) AS RANGE RIGHT FOR VALUES (1, 2, 3, 4, 5)

GO

CREATE PARTITION SCHEME PS AS PARTITION PF ALL TO ([PRIMARY])

GO

DROP TABLE IF EXISTS dbo.tbl

CREATE TABLE dbo.tbl (a SMALLINT PRIMARY KEY) ON PS (a)

GO

INSERT INTO dbo.tbl (a)

VALUES (0), (1), (2), (3), (4), (5)

SELECT partition_number, [rows]

FROM sys.partitions

WHERE [object_id] = OBJECT_ID('dbo.tbl')

AND index_id < 2

partition_number rows ---------------- ------ 1 1 2 1 3 1 4 1 5 1 6 1

TRUNCATE TABLE dbo.tbl WITH (PARTITIONS (1, 4 TO 5))

partition_number rows ---------------- ------ 1 0 2 1 3 1 4 0 5 0 6 1

A small postscript…

From what I see now, the new version of SQL Server 2016 promises to be very interesting. Each new CTP adds a large number of features, which are difficult to describe in one article. To preserve readability, I left behind Temporal Tables, Dynamic Data Masking and improvements in In-Memory, which I plan to discuss soon.

Great article! I am working on an executive overview that I need to present to my manager, can anybody point me to some other high-level discussions on this topic, I’m not a great writer (except when it comes to code :)) and I would like to see how some people describe it in a way that’s easy for non-technical folks … Thanks