CSV files provide a great way to collect and store data, and they are often used as a reliable way to move table data between servers. In this article, we’ll look at two ways of importing and exporting PostgreSQL tables from and to CSV files – using the built-in import and export functionality of dbForge Studio for PostgreSQL and using the COPY command.

Creating a CSV file and preparing the table structure

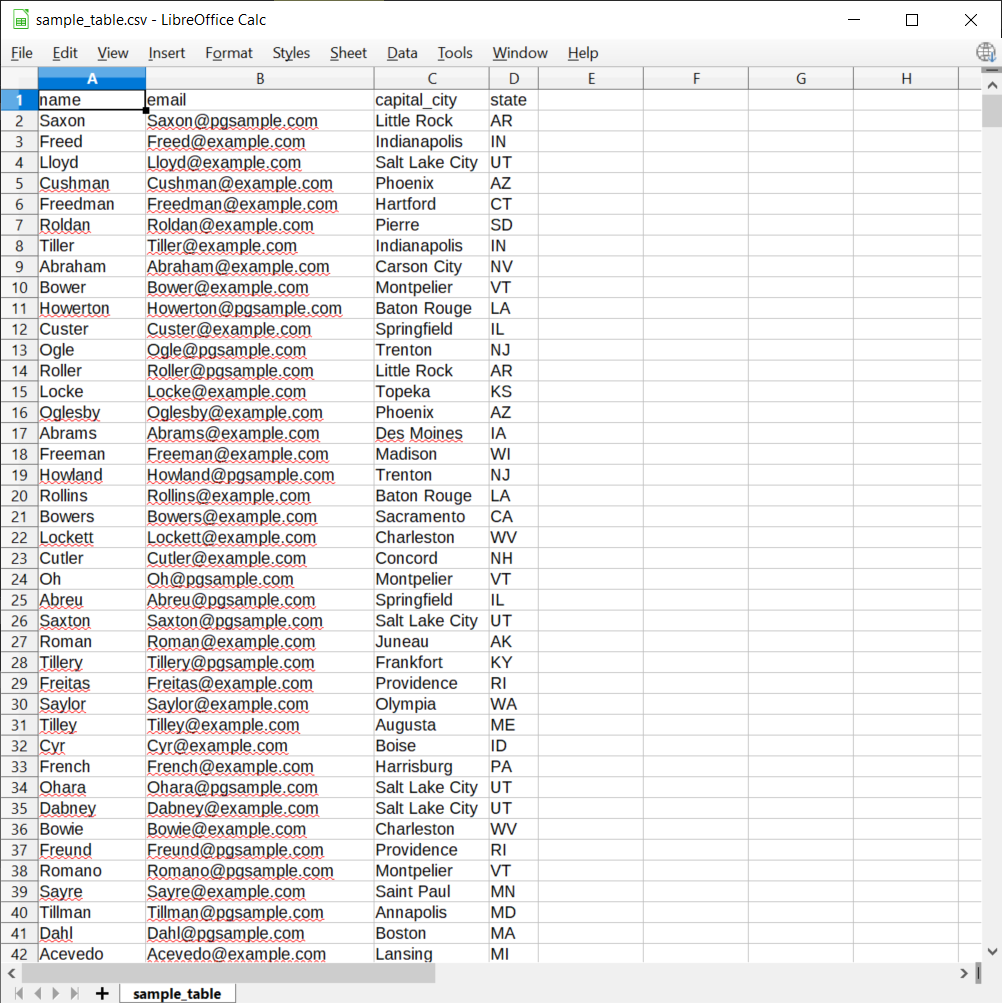

First of all, we’ll need a CSV file with data and a basic table

structure that will be able to house this data.

You can

use any CSV file on your computer for this example. If you have no

CSV files to use, there are two ways you can create such a file:

- Creating it in a specialized application (e.g., Microsoft Excel)

- Using any text editor to create a new file, pasting some comma-delimited text data into it, and saving it as a CSV file (for example, table_data_import.csv).

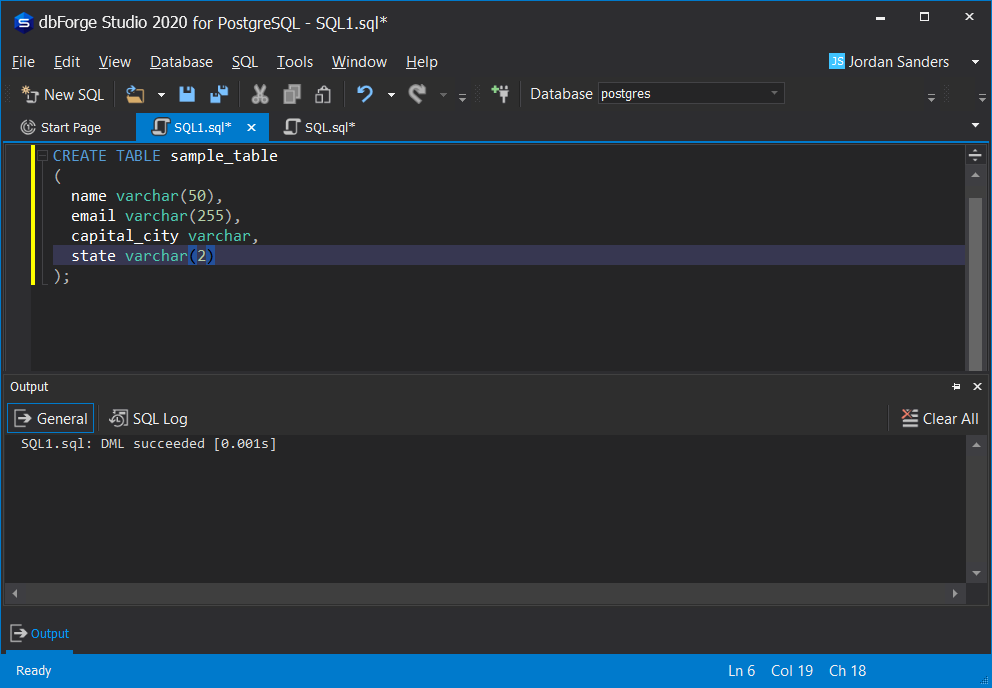

After that, we need to create an empty table in your database. This table’s columns should represent how the data from the CSV file will be interpreted. For example:

CREATE TABLE sample_table

(

name varchar(50),

email varchar(255),

capital_city varchar,

state varchar(2)

);

Once we have both the CSV file and the table structure, we can actually test the import and export processes for PostgreSQL table data.

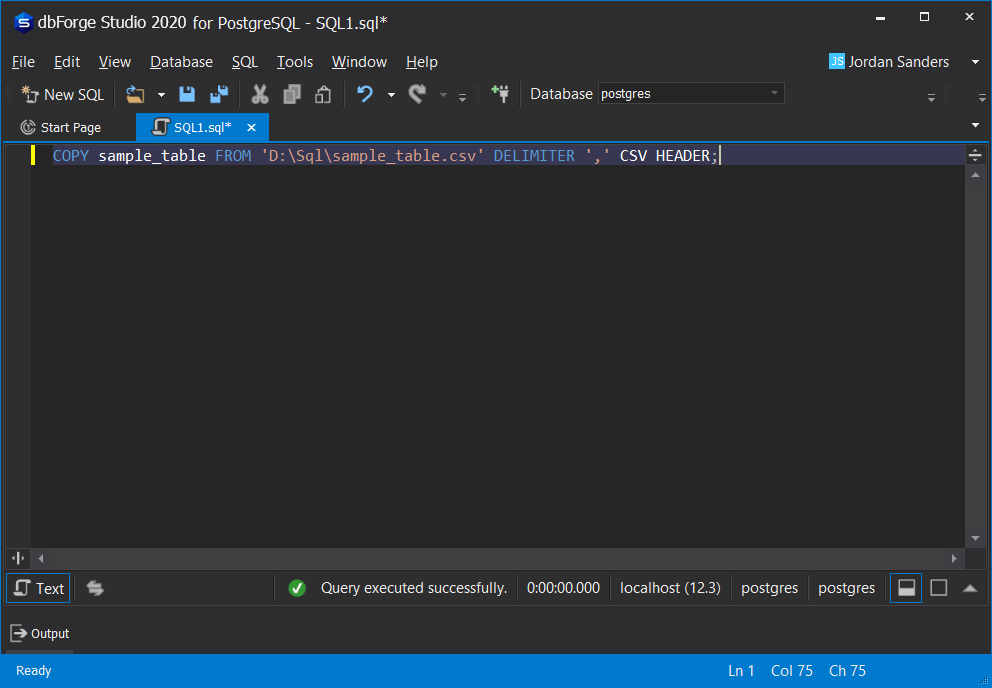

Importing a CSV file using a COPY statement

One way of importing a CSV file into a table is using the COPY statement. This command copies all records from a specified file into a target database table. Here’s the basic syntax of the COPY command:

COPY FROM 'location + file_name' DELIMITER ',' CSV HEADER;

<table name> – the name of the table you want to import data into.

‘location + file_name’ – the full path to the file you’re importing data from.

DELIMITER ‘,’ – specifies the delimiter we use as the comma symbol.

CSV – specifies the format of a file we’re importing data from.

HEADER – specifies that the target file contains a header row that should be skipped during the import process.

In our example, the statement will look like this:

COPY sample_table FROM 'D:\csv_examples\table_data_import.csv' DELIMITER ',' CSV HEADER;

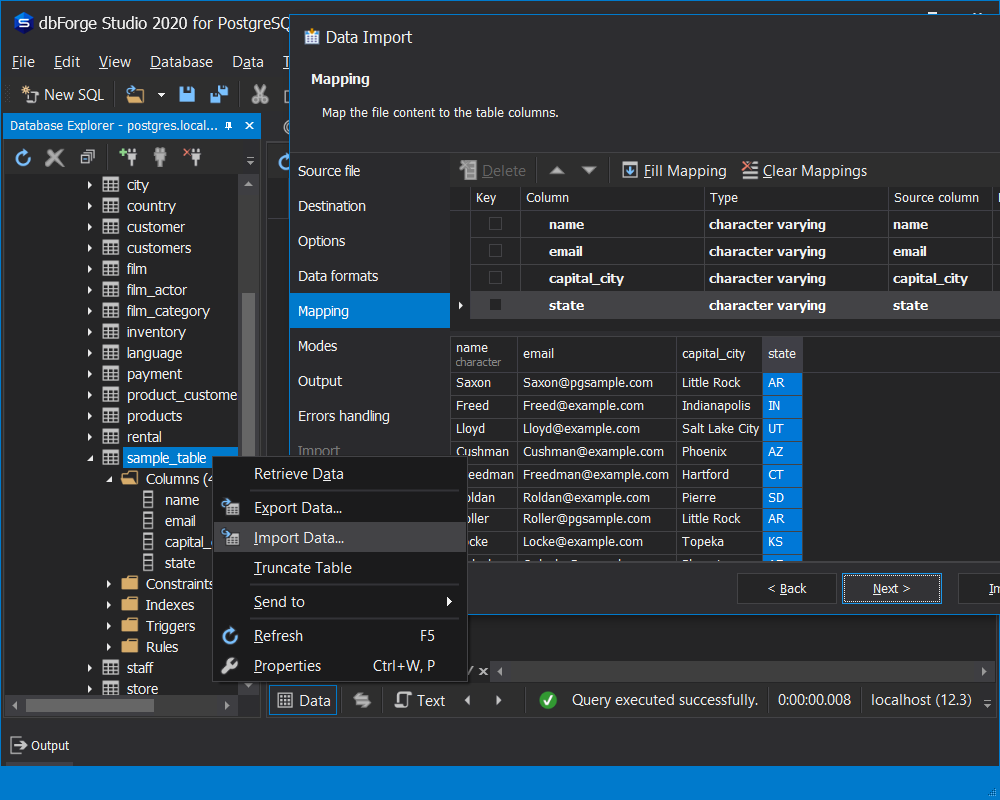

Importing a CSV file in dbForge Studio for PostgreSQL

Alternatively, you can import a CSV file into a table directly from dbForge Studio for PostgreSQL.

To do this, go to Database → Import Data…

The

Data Import wizard will be opened. Here, choose CSV and

specify the path to the file in the File Name

section located at the bottom of the window.

From here, the Data Import Wizard will guide you through configuring the settings of the import process.

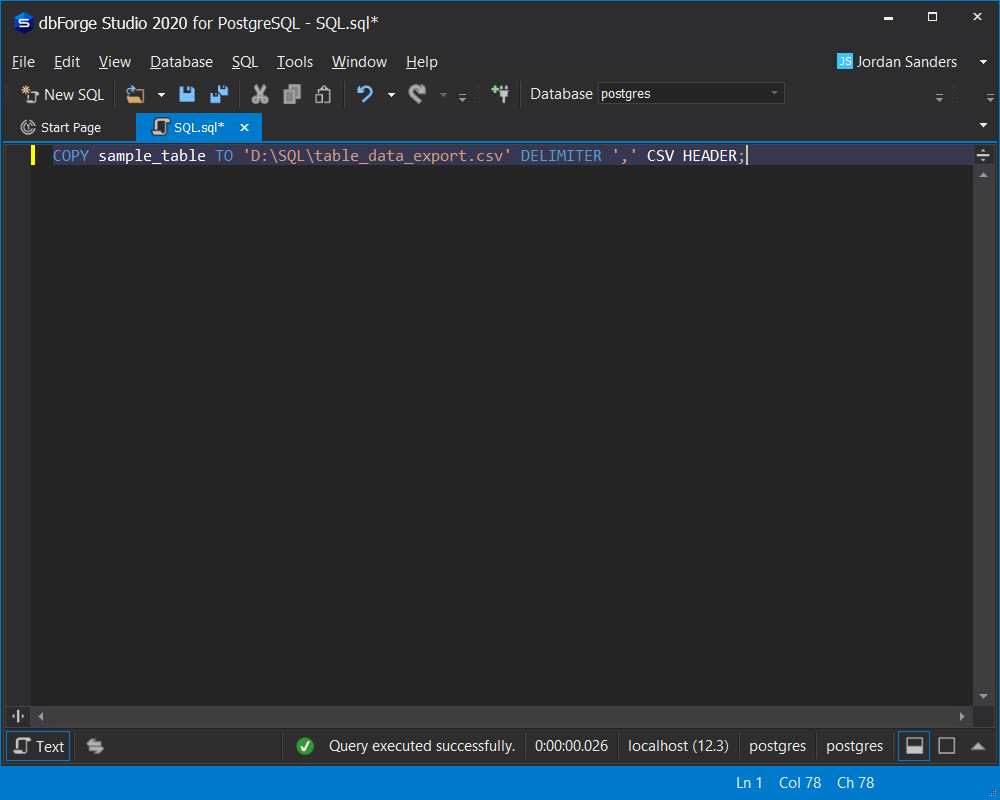

Exporting a CSV file using a COPY statement

The process of exporting PostgreSQL data into a CSV file is very similar to what we’ve been doing for importing. The only difference here is that you need to change the FROM keyword in the COPY command to the TO keyword. By doing this, you will make the command export the data from the target table into a specified CSV file. Here’s what the command will look like in this case:

COPY sample_table TO 'D:\csv_examples\table_data_export.csv' DELIMITER ',' CSV HEADER;

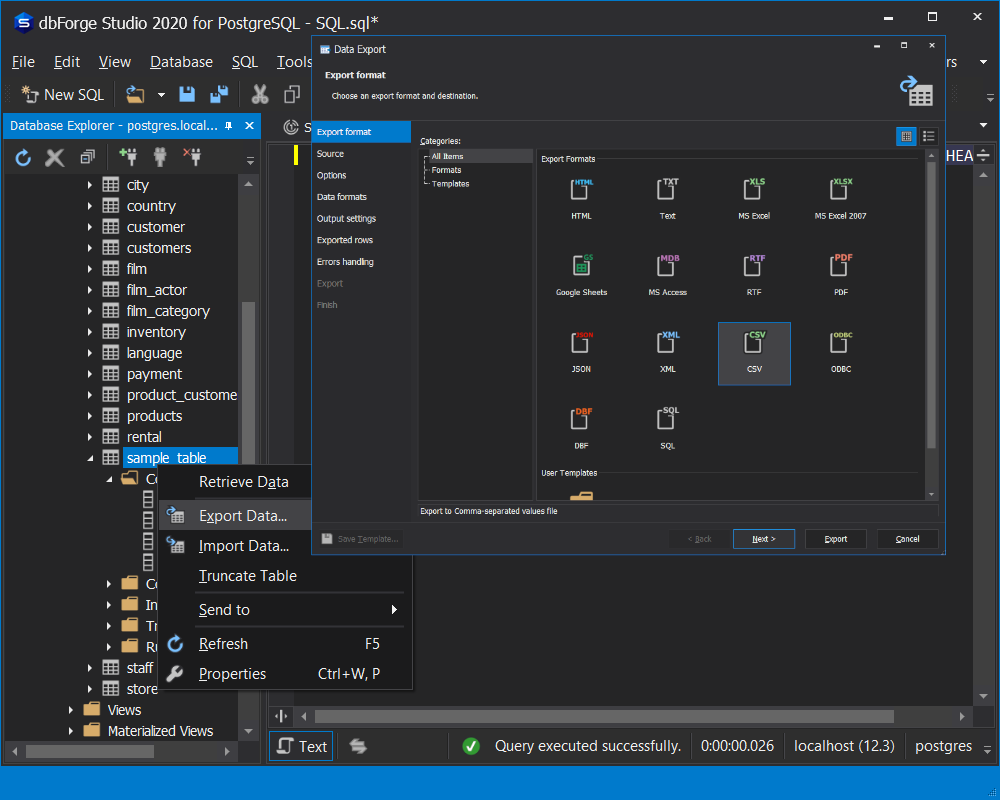

Exporting a CSV file in dbForge Studio for PostgreSQL

You can also export data from a table into a CSV file directly from dbForge Studio for PostgreSQL.

First, you would simply need to navigate to Database → Export Data…

This will prompt the Data Export wizard to open, and from that point, it will provide you a wide range of settings for the export process.

See also: How to duplicate a table in PostgreSQL

Conclusion

In this article, we looked at two ways of importing and exporting PostgreSQL tables from and to CSV files. To get a better insight into the question, you can learn more about the data import and export functionality of dbForge Studio for PostgreSQL. You can download dbForge Studio for PostgreSQL and check its full capabilities in a free 30-day trial version. If you wish to automate this process, feel free to check out the latest tutorial by Skyvia cloud platform.