Transaction logs play a crucial role in database management, especially in SQL Server databases. These logs meticulously record every transaction and the corresponding changes made to the database. Such detailed tracking is indispensable for identifying issues and restoring the database to a specific state and time if any failures occur.

In SQL Server, every database includes a transaction log, a feature that is always enabled and cannot be turned off. This article will explore various methods to view and analyze transaction logs, focusing on SQL Server’s native capabilities and third-party graphical user interface (GUI) solutions.

Contents:

- Understanding SQL Server transaction logs

- Method 1: Using native SQL Server functions

- Method 2: Using the dbForge Transaction Log reader tool

- Additional techniques and considerations: transaction log backups

- Conclusion

- Frequently Asked Questions (FAQ)

Why you need a SQL transaction log viewer

SQL Server’s transaction log is the backbone of data integrity, recovery, and auditability. But without a way to view and analyze it, this critical resource remains opaque. A transaction log viewer turns its raw, low-level data into strategic visibility. Here’s why it matters.

1. Incident response and forensic analysis

When systems fail or data is compromised, log files offer the only definitive account of what happened. A transaction log viewer allows you to trace every operation, down to the individual row-level change, with time stamps, transaction IDs, and user context. Whether you’re investigating a breach, internal misuse, or accidental data loss, it’s the fastest path to clarity.

2. Undoing risky or unauthorized changes

Not all mistakes require a full database restore. With a viewer, you can isolate specific transactions, such as a mass delete or an unintended update, and generate precise rollback scripts. This reduces downtime, avoids broader data loss, and lets you surgically undo changes without affecting healthy data.

3. Internal and regulatory audits

Financial services, healthcare, and other regulated industries are subject to stringent audit and reporting requirements. While SQL Server logs all changes, the data is virtually unreadable without tooling. A log viewer exposes exactly who did what and when—critical for proving compliance with data retention policies, access controls, and change management standards.

4. Debugging transaction-level anomalies

When applications misbehave, traditional logging doesn’t always tell the whole story. Transaction logs reveal internal behavior that may not be apparent elsewhere, such as deadlocks, long-held locks, rolled-back transactions, or write conflicts. With proper visibility, developers and DBAs can debug issues that would otherwise go unresolved.

5. Operational intelligence and optimization

Beyond problem-solving, the transaction log offers insights into how systems operate under load. With a viewer, you can monitor usage patterns, identify frequently updated tables, detect excessive logging, and assess how indexing strategies affect write activity. These insights inform capacity planning and performance tuning.

How SQL Server stores transaction logs

SQL Server’s transaction log is more than a passive record, it’s the foundation for recovery, concurrency, and data durability. Every time a transaction occurs, whether it’s a single-row update or a complex batch operation—SQL Server writes the details to the transaction log before committing the disk change. This write-ahead logging model guarantees that the system can recover to a consistent state after any failure.

What the transaction log captures

The transaction log captures a detailed, ordered history of every data modification event in the database, including:

- Start and end markers for each transaction

- INSERT, UPDATE, and DELETE operations

- Table or index creation and deletion

- Page allocations and deallocations

- Table truncations

- Lock acquisition details

Each change is recorded with a Log Sequence Number (LSN), which acts as a timestamp and helps SQL Server determine which transactions to roll forward or roll back during recovery.

How the log is structured

The transaction log is stored in .ldf files, which may be one or many per database. Internally, SQL Server breaks the log into smaller units called Virtual Log Files (VLFs). These segments enable SQL Server to reuse space efficiently; however, mismanagement, such as uncontrolled growth or excessive VLFs, can lead to performance degradation and prolonged recovery times.

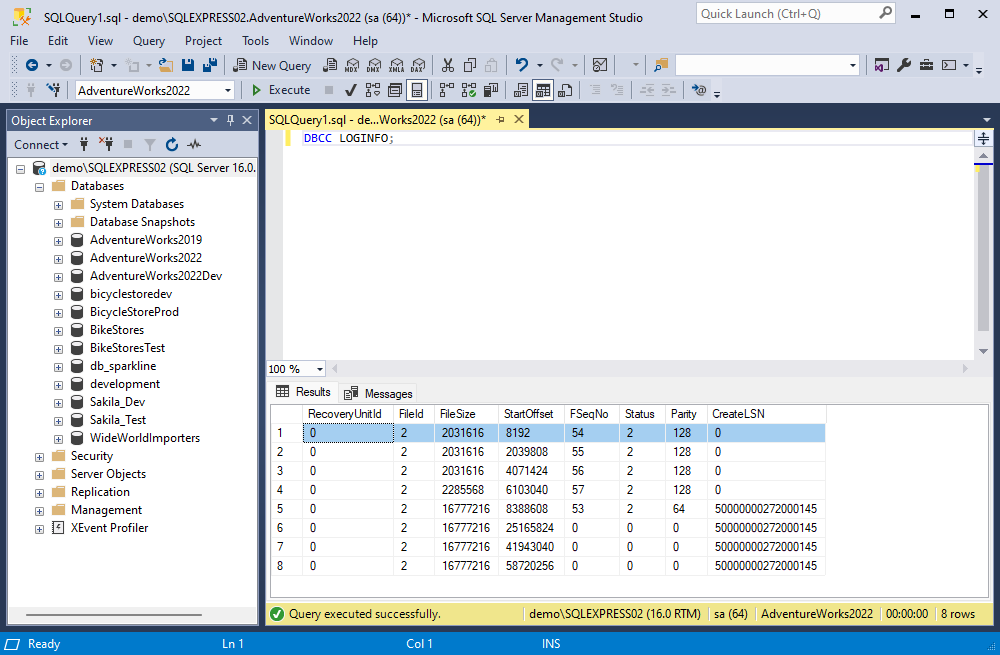

To inspect the current VLF layout, you can use:

DBCC LOGINFOThis command returns a table listing each VLF’s size, status, and sequence, which can help identify fragmentation or excessive growth.

Why Transaction Logs and Their Management Matter in SQL Server

Transaction logs are essential for maintaining data consistency and ensuring stable database performance. They record every change made to the database—but SQL Server doesn’t offer a dedicated interface to view or analyze this information. Accessing it requires using specific methods or third-party tools.

At the same time, transaction logs grow as operations run. Without proper management, they can quickly consume disk space and slow down performance. SQL Server controls this growth through the FILEGROWTH setting, but it’s up to the DBA to define appropriate growth patterns and ensure regular log truncation.

Truncation doesn’t shrink the physical file; it simply frees up space by marking committed transactions as reusable. In simple recovery mode, this happens automatically. In full or bulk-logged recovery modes, it only occurs after a log backup—making your backup strategy essential not just for data protection, but also for controlling log size.

Now that we understand why transaction logs matter and why their size must be managed, let’s explore how to actually access and review this data using built-in SQL Server functions.

Method 1: Using native SQL Server functions

While SQL Server maintains detailed transaction logs, it doesn’t provide an out-of-the-box visual interface to rWhile SQL Server maintains detailed transaction logs, it doesn’t provide an out-of-the-box visual interface to read them. Instead, it offers undocumented functions that expose this data, powerful for advanced users, but not without limitations or risks. These native methods are often utilized by experienced database administrators (DBAs) in emergency recovery, auditing, or forensic workflows.

Here are the primary options:

Using fn_dblog() function

The fn_dblog() function lets you query the active portion of the transaction log—the area still in use by SQL Server. It is beneficial for analyzing uncommitted transactions or recent data changes that have not yet been backed up.

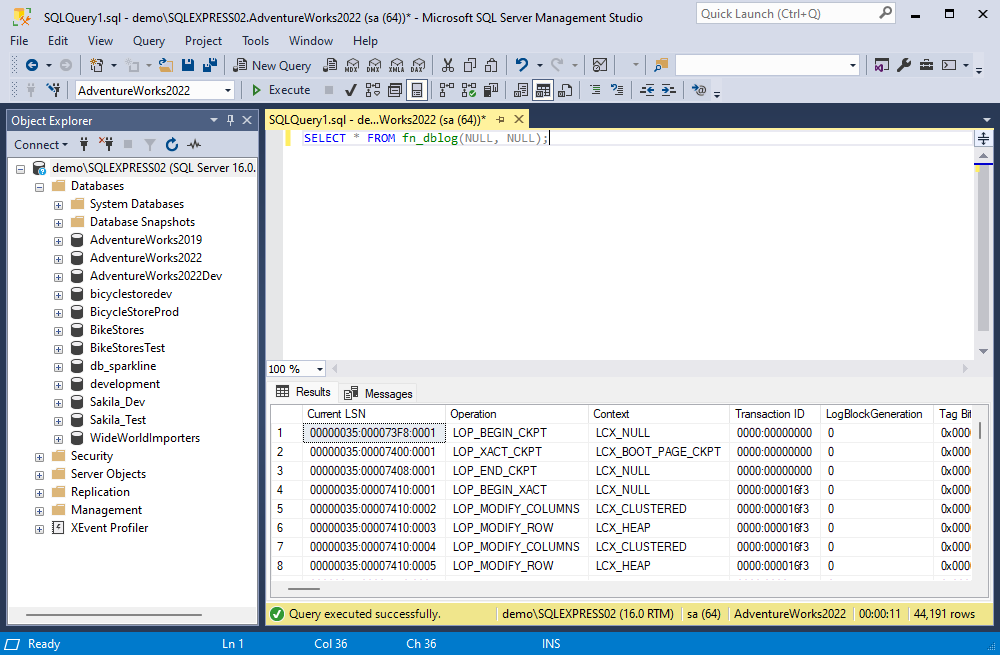

To utilize fn_dblog() for viewing transaction logs, open SQL Server Management Studio (SSMS) and connect to the necessary database. Then enter the below command.

SELECT * FROM fn_dblog(NULL, NULL); The output will display all records in the transaction log, sorted by transaction sequence number. Note that we use (NULL, NULL) as parameters to view all active and online logs.

However, this command retrieves 129 columns which may contain unnecessary data, requiring additional time and effort to sort through. Therefore, it’s often more beneficial to narrow down the query’s scope.

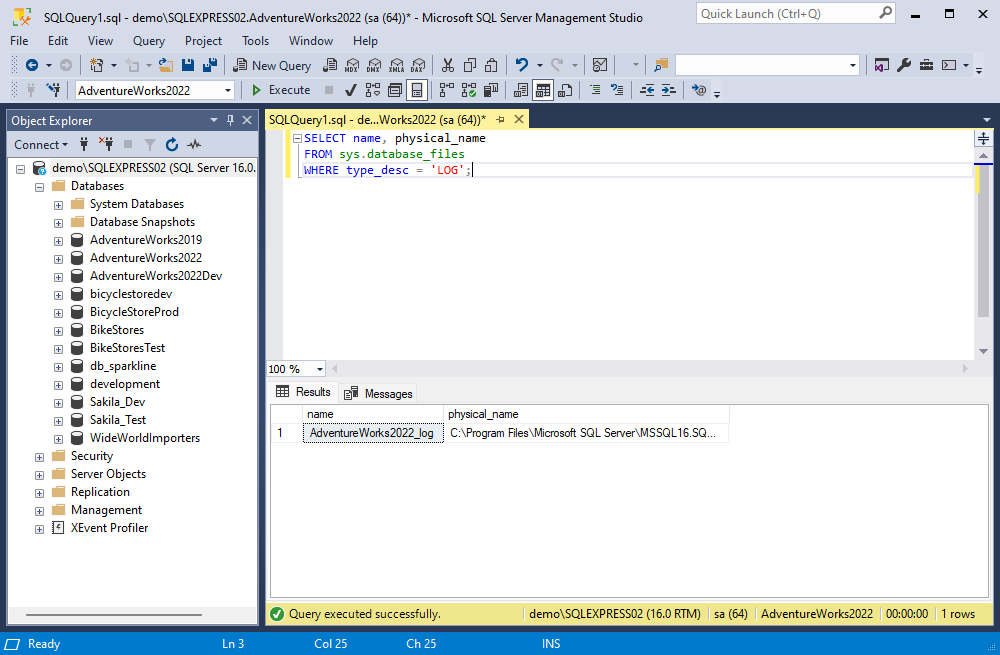

The following command syntax retrieves the list of transaction log files related to a specific database:

SELECT name, physical_name

FROM sys.database_files

WHERE type_desc = 'LOG';

Furthermore, you can apply additional parameters to the fn_dblog() function. With their help, you might specify the start and end times for transactions, and transaction types, limit the query to transactions associated with a particular table, etc.

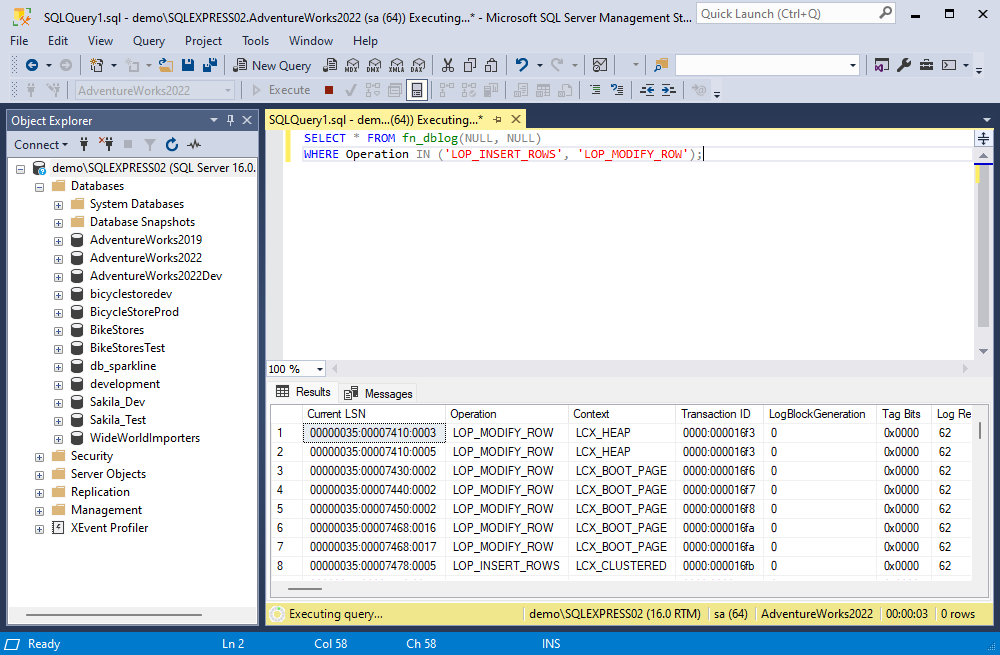

SELECT * FROM fn_dblog(NULL, NULL)

WHERE Operation IN ('LOP_INSERT_ROWS', 'LOP_MODIFY_ROW');

This command provides information about transactions that inserted or modified rows.

Note: fn_dblog() is undocumented. Microsoft does not support it and may return inconsistent results across different versions. It only works on active logs—once a transaction is backed up and truncated, this method can no longer access it.

Using fn_dump_dblog() function

The fn_dump_dblog() function provides access to both online and backup transaction logs, addressing a limitation of fn_dblog(), which only works with active transaction logs.

The syntax of the query utilizing fn_dump_dblog() can be complicated, as it includes 63 parameters, all of which must be specified, but you can simplify the process by using “DEFAULT” for all parameters. Also, make sure to replace path_to_the_transaction_log_backup with the actual path to the location where you store transaction log backups.

SELECT [Current LSN], Context, [Transaction Name], Operation, [Transaction ID], Description

FROM fn_dump_dblog(

NULL, NULL, N'DISK', 1, N'path_to_log_backup.bak',

DEFAULT, DEFAULT, ..., DEFAULT

); The query output includes 129 columns, matching fn_dblog. To optimize your results, consider specifying only the columns you require and narrowing the query’s focus.

SELECT [Current LSN], Context, [Transaction Name], Operation, [Transaction ID], Description

FROM fn_dump_dblog(

NULL, NULL, N'DISK', 1, N'path_to_log_backup.bak',

DEFAULT, DEFAULT, ..., DEFAULT

); A notable benefit of utilizing fn_dump_dblog() is that it allows the database administrators to restore your database to a precise moment in time using the WITH STOPBEFOREMARK statement. This way, administrators can correct transaction errors or undo changes that impact database performance.

DBCC PAGE command

To extract information from transaction log files, you can use the DBCC PAGE command that enables the reading of MDF and LDF files while they are online. DBCC PAGE is a diagnostic tool that displays the contents of a specific data or log file page. It is often used for in-depth troubleshooting, including investigating internal structures or corruption.

Before using this command, it’s necessary to activate the trace flag 3604. This step is essential as it allows the results to be displayed in the results window. To enable it, use the command:

DBCC TRACEON (3604, -1)Once this is set up, you can proceed with the DBCC PAGE command. This basic syntax is as follows:

DBCC PAGE ({'Database name' | DB_ID}, File_number, Page_number [, output={0|1|2|3} ])However, this command should be used with caution, and it is not recommended for accessing transaction log data directly. Besides, the output may include various error messages and warnings, and the format of the online LDF file content is not designed for easy interpretation by humans.

Final Thoughts on Native Methods

These native options are powerful but come with caveats. They:

- They are undocumented, meaning their behavior may change between SQL Server versions.

- Expose internal structures not meant for casual use.

- Require significant expertise and care—mistakes can lead to misinterpretation or, worse, unintended side effects if misused.

For day-to-day operations, auditing, or rollback purposes, third-party tools often provide safer and more intuitive alternatives.

Method 2: Using the dbForge Transaction Log reader tool

Many developers of database management software recognize the significance of the data stored in transaction logs and offer specialized tools. Such tools are designed to ensure easy access to these logs and user-friendly viewing of their contents, as well as efficient management of the stored data.

One such tool is dbForge Transaction Log for SQL Server, a dedicated SQL Server log reader and .LDF file viewer. This powerful tool allows users to examine and analyze the information contained within SQL Server transaction logs, also providing data recovery capabilities. Furthermore, dbForge Transaction Log enables users to undo unwanted transactions and recover data directly through the user-friendly graphical interface.

Key capabilities

dbForge Transaction Log offers features that go well beyond what native methods provide. These include:

- Viewing the detailed login information

- Auditing of data changes in tables

- Access to the database change history

- Transaction replay and rollback capabilities

- Recovery of deleted records

- Reversion of selected tables to a specific point in time

- Exporting transactions into SQL files

This tool equips developers and database administrators with the essential functionalities needed for efficient and effective management of transaction logs and their associated data.

Let us now review the usage of dbForge Transaction Log for SQL Server. In this article, we will consider utilizing this tool for the following purposes:

- Rolling back changes

- Replicating changes to another database

To illustrate the work of the dbForge Transaction Log reader, we will use the sample database for a recruiting agency.

Reading SQL transaction log files for rolling back changes

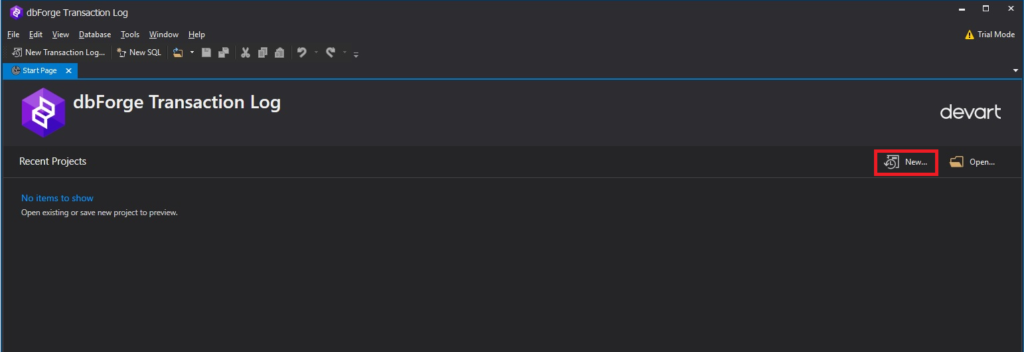

Step 1. Let’s create a new project by clicking the New button.

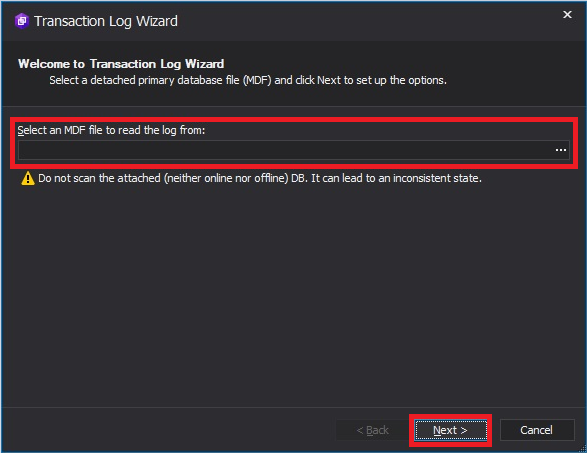

Step 2. We will select the database’s .mdf file, which works together with its .ldf file to provide complete log data. These mdf and ldf files are both required for accurate transaction log analysis and recovery.

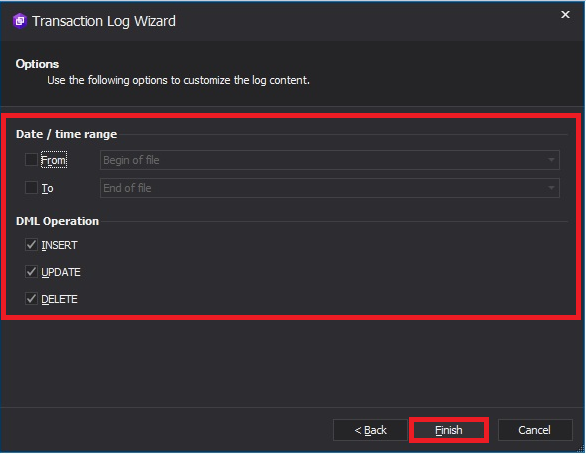

Step 3. Set the time range for the operations and select the types of operations (INSERT, UPDATE, DELETE) to customize the log output. In our case, we are concerned with the entire time range and all types of operations. Having made the necessary configuration settings, click the Finish button.

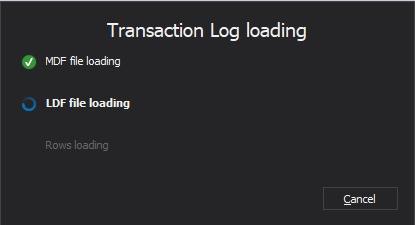

The screen will display the Transaction Log loading window showing the process of collecting information according to the configured options.

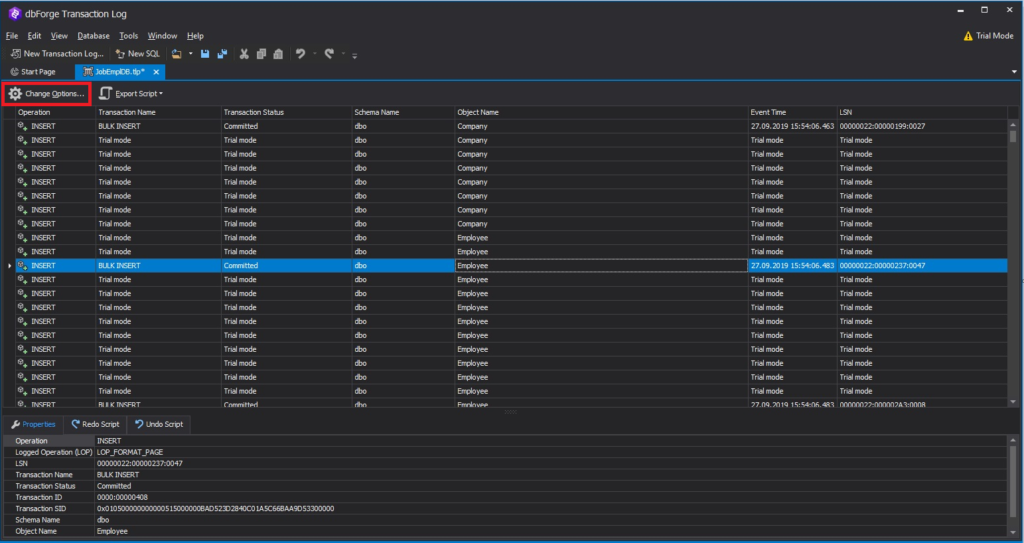

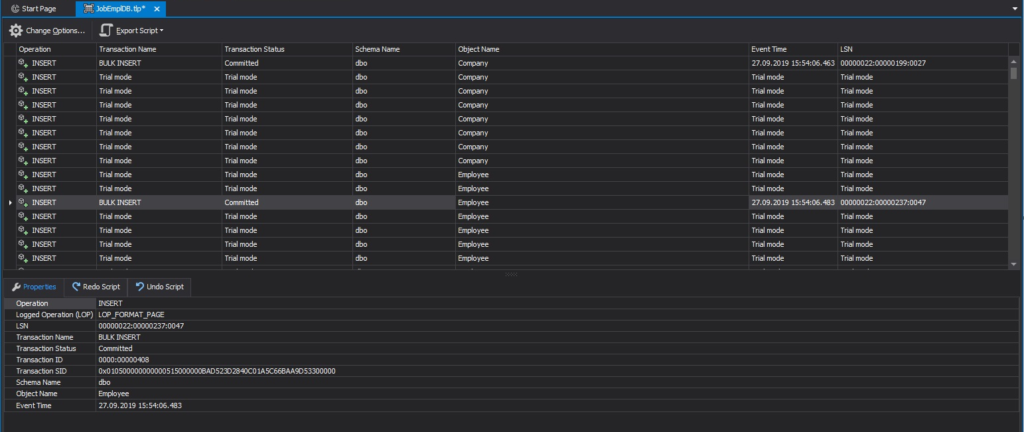

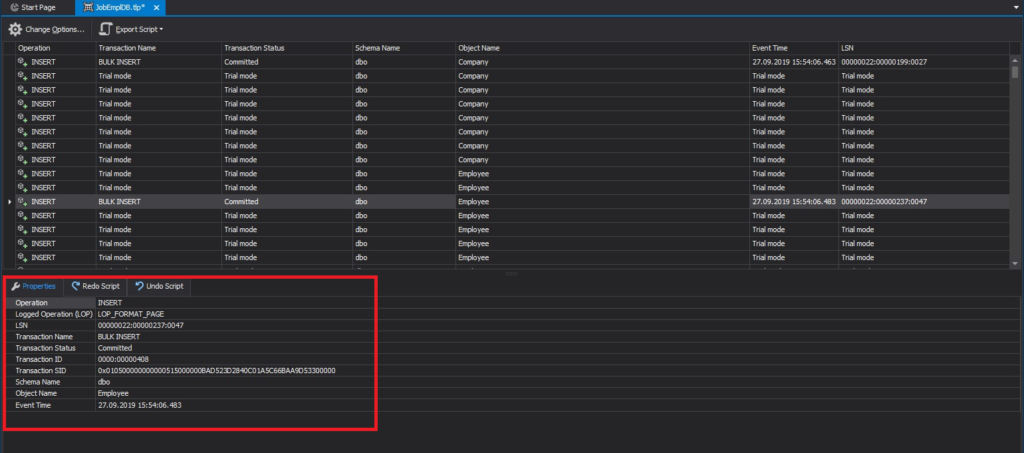

Once the data is processed, the following window displaying the project you’ve created will appear.

Step 4. In the project window, you can change the transaction selection settings by clicking the Change Options button.

Now let’s consider the project window in more detail.

As you can see, it displays a table and the details for each selected row. The details include:

1) Properties that describe the transaction itself.

2) T-SQL transaction script.

3) T-SQL script to undo the transaction.

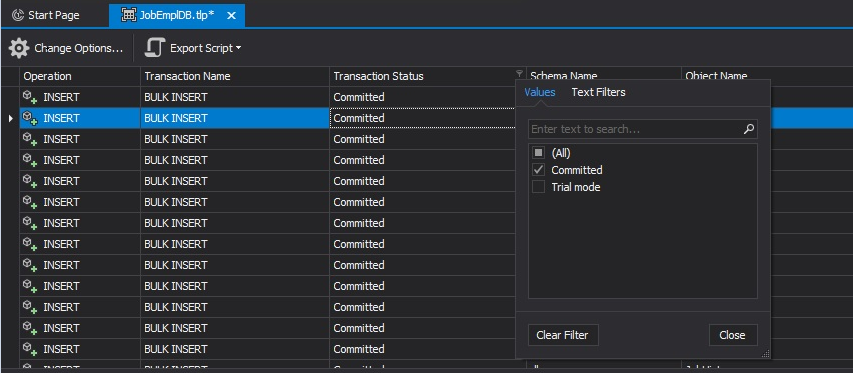

Step 5. Sort out only committed transactions by selecting the Committed option in the filter for the Transaction Status column.

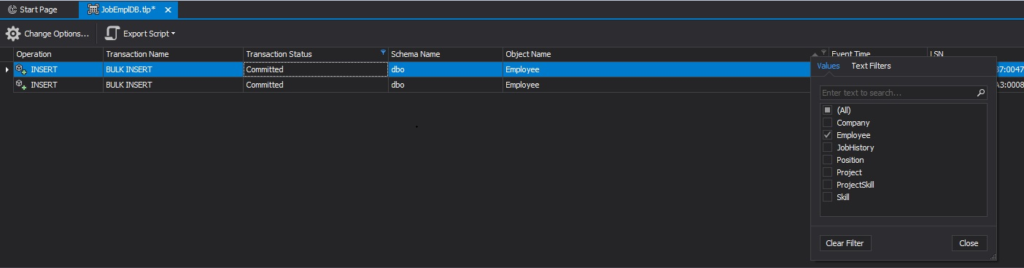

Step 6. In the filter for the Object Name column, we will select Employee, thereby sorting out only those transactions that are associated with the Employee table.

Thus, we get all committed transactions associated with the Employee table.

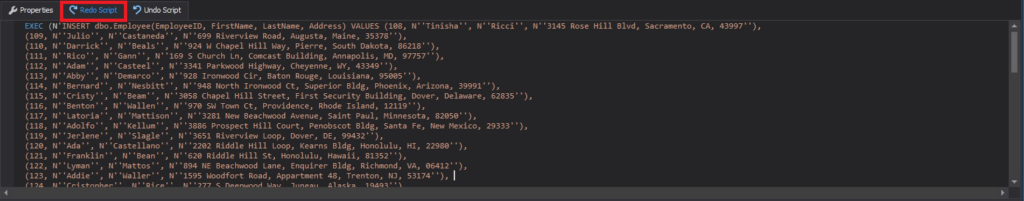

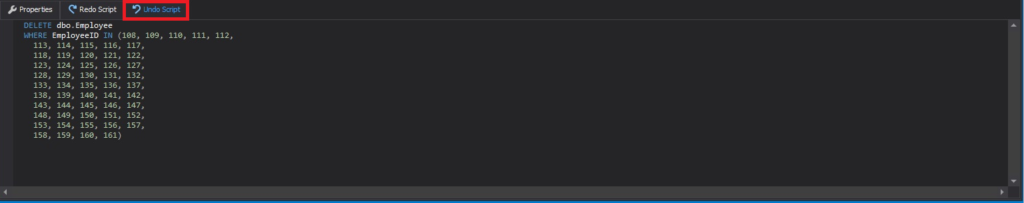

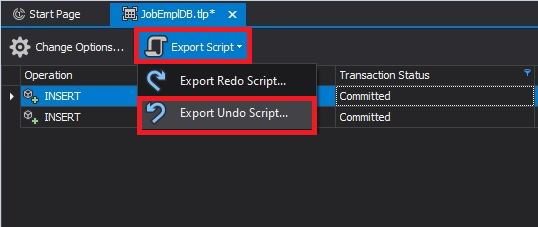

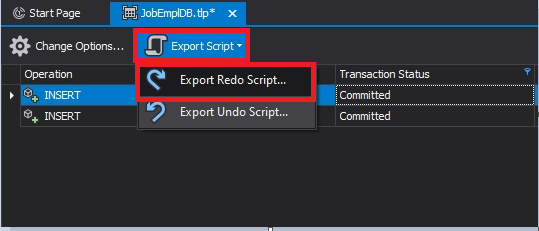

Step 7. Now we can generate a script to roll back all committed transactions associated with the Employee table by clicking the Export Script button and then selecting the Export Undo Script option from the drop-down menu.

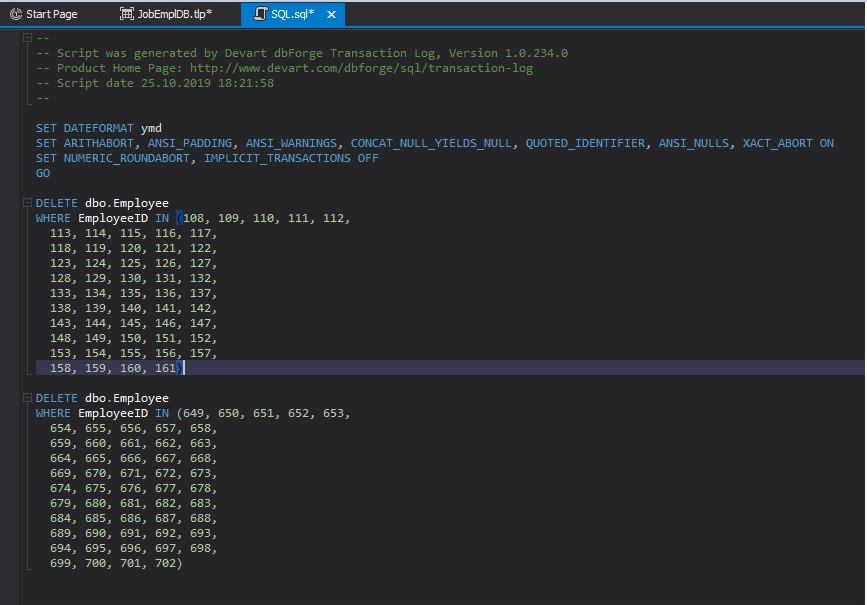

Step 8. The script we need will be displayed. You can use it to roll back the changes to the current database.

Reading SQL transaction log files for replicating changes to another database

Similarly, you can generate a script that was used to create the transactions. You may need it to replicate the transactions to another database. To do this, click the Export Script button and then select the Export Redo Script option from the drop-down menu.

Why professionals choose dbForge

dbForge Transaction Log reduces risk and complexity. It replaces undocumented SQL commands with an intuitive interface, safeguards data integrity, and accelerates common tasks like auditing and recovery.

For database administrators, developers, and compliance teams, it transforms the transaction log from a passive record into an actionable asset.

Comparison of SQL Server transaction log viewing methods

| Method | Description | Advantages | Limitations |

|---|---|---|---|

| fn_dblog() | An undocumented system function that queries the active portion of the transaction log. | • Real-time access to transaction data. • Supports filtering by operation types (e.g., INSERT, UPDATE). | • Limited to active logs; cannot read archived log backups. • Returns over 129 columns—requires expert filtering. • Advanced SQL knowledge needed for interpretation. |

| fn_dump_dblog() | Extends fn_dblog() functionality to read from both active logs and transaction log backups. | • Accesses both active and historical logs. • Enables analysis beyond the current log scope. | • Undocumented and unsupported by Microsoft. • Requires up to 63 parameters. • Complex syntax not suited for casual use. |

| DBCC PAGE | An undocumented command that reads raw database pages, including log pages. | • Provides detailed, page-level insights. • Valuable for deep-dive diagnostics. | • Outputs data in hexadecimal format—challenging to interpret. • Requires trace flags. • Not intended for routine log review. |

| Third-Party Tools (e.g., dbForge Transaction Log) | Commercial solutions offering advanced transaction log viewing and management. | • Intuitive graphical interfaces. • Access to both active logs and backups. • Supports auditing, data recovery, and rollback. | • License fees required. • Dependency on third-party vendor support and updates. |

Additional techniques and considerations: transaction log backups

Saving database transaction logs is crucial to ensure that valuable information within them is never lost and guarantees our ability to restore failed SQL Server databases to a working state. Here are some key considerations:

- Begin with a full database backup: Always initiate the backup process with a full database backup. Transaction log backups should complement full SQL Server backups (it’s also advisable to create differential SQL Server database backups). Without a full backup as a starting point, transaction log backups cannot be created.

- Establish a backup schedule: To determine the optimal frequency for backing up the database and transaction logs, analyze your work patterns and database performance. Identify critical recovery metrics, time constraints, and point objectives. These factors will help define an effective backup schedule.

- Follow a recovery sequence: When it comes to database recovery, start by restoring the full database backup, followed by the last differential backup, and finally, restore the transaction log backups to the required point in time. This systematic approach ensures a successful recovery process.

- Safely store backups separately: To prevent potential data loss, store all backups in a secure location separate from your primary database environment. Keeping transaction log backups in the same environment as your resources increases the risk of losing them in case of database damage.

These practices will help you safeguard your database transaction logs effectively and ensure a reliable recovery process in case of failures and other unforeseen issues.

Pro tip: Monitor virtual log file (VLF) counts regularly to avoid fragmentation and performance degradation.

Conclusion

Though database transaction logs are vital for maintaining data consistency and security, extracting and understanding the information stored within these logs can be a complex endeavor. There are methods available for accessing this information, but they are not documented in Microsoft SQL Server, and the results they provide often lack comprehensiveness and user-friendliness.

Fortunately, there are third-party tools such as dbForge Transaction Log for SQL Server that address these challenges. This tool simplifies the process of obtaining essential information, facilitates easy management, and even enables direct data restoration, ultimately saving time and effort. You can thoroughly test the Transaction Log tool’s complete functionality in a real-world scenario, as it offers a fully functional trial for 30 days.

Frequently Asked Questions (FAQ)

How do I enable the transaction log in SQL Server?

You don’t need to manually enable transaction logs in SQL Server. Every database automatically maintains a transaction log as a core part of SQL Server’s write-ahead logging mechanism. However, you can configure log file size, growth, and recovery models through SQL Server Management Studio (SSMS) or T-SQL.

How to check the LSN in SQL Server?

You can view the Log Sequence Number (LSN) using the fn_dblog() function or by querying backup history.

SELECT

TOP 1 backup_finish_date,

first_lsn,

last_lsn,

database_name,

type

FROM msdb.dbo.backupset

WHERE database_name = 'AdventureWorks2022'

AND type = 'L' -- L = Log backup

ORDER BY backup_finish_date DESC; How to check SQL Server logs?

For general error and system logs, use the code below.

EXEC xp_readerrorlog; For transaction logs, use functions like fn_dblog() or third-party tools for a more user-friendly view.

How to check if the transaction log is full in SQL Server?

To determine whether the transaction log is approaching full capacity, you can use the command below.

DBCC SQLPERF(LOGSPACE); This returns the percentage of space used in the transaction log. If the value approaches 100%, the log is nearly full.

Where is the SQL Server history log?

SQL Server maintains a variety of logs:

- Transaction logs: .ldf files located where the database files reside.

- SQL Server Agent job history: Viewable in SSMS under SQL Server Agent → Job History.

- Error logs: Accessible via xp_readerrorlog or SSMS under Management → SQL Server Logs.

How many log files are in SQL Server?

Each database typically has one transaction log file by default, but you can add more if needed. SQL Server treats multiple log files sequentially, not in parallel, so adding log files does not improve performance but may help with disk space management.