As database technology keeps evolving, following database design best practices remains essential to keeping your database application efficient. A well-designed database defines clear relationships between tables and schemas, which makes the application faster and lets it scale smoothly. A poorly designed database, on the other hand, leaves the application prone to slow performance and scalability issues.

Whether you’re a database developer, data analyst, or database administrator, following database design best practices is necessary to build an effective database.

This guide explains the best practices you need to learn to build successful database systems. It also explains why dbForge Edge is the best tool for building an efficient and effortlessly scalable database design.

Let’s get started.

- Why database design matters

- Key principles of database design

- Best practices for database schema design

- Relational database design best practices

- Database table design best practices

- Common database design mistakes to avoid

- How dbForge Edge can help

- Conclusion

Why database design matters

A good database design is the backbone of any successful data-driven project. When done right, it ensures that your system can handle growing data volumes, evolving business requirements, and complex queries without breaking a sweat.

Below are some of the reasons why database design is key.

Optimized data retrieval and storage

Schemas and tables are two core parts of database design. When these two are well-structured and organized, queries run faster, server load decreases, and maintenance is easier.

Prevent data redundancy and inconsistency

A well-designed database ensures consistent data integrity through thoughtful table relationships, proper normalization, and clear naming conventions.

Having a well-designed database isn’t optional; it’s essential. Get it right from the start, and your database will serve as a stable, scalable foundation for years to come.

Key principles of database design

To achieve a solid database design, there are principles to help you ensure that it is efficiently structured, supports current needs, and scales effortlessly in the future. Below are some of these database design principles.

Normalization

Normalization is the process of organizing data to reduce redundancy and improve integrity. It involves breaking large, complex tables into smaller, related ones while maintaining the logical connections between the data. This structured approach makes the database cleaner, more efficient, and easier to manage and update.

Below are the most common normal forms you can use when designing your database:

- 1NF (First Normal Form): Ensures each table column contains atomic (indivisible) values and that each record is unique.

- 2NF (Second Normal Form): Builds on 1NF by removing partial dependencies and ensuring all non-key columns depend entirely on the primary key.

- 3NF (Third Normal Form): Removes transitive dependencies and ensures that non-key columns depend only on the primary key.

- Boyce-Codd Normal Form (BCNF): A stricter version of 3NF, addressing anomalies that can still exist in 3NF structures.

Although normalization boosts data integrity and minimizes redundancy, there are scenarios, especially in analytics or read-heavy systems, where denormalization can improve performance by reducing complex JOINs. Denormalization is the process of combining tables or duplicating data to intentionally add redundancy to a database. Whichever approach you use, striking the right balance is key.
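To make the trade-off concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The table and column names (`orders_flat`, `customers`, `orders`) and the sample rows are illustrative assumptions, not part of any real system.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

conn.executescript("""
-- Unnormalized: the customer's city is repeated on every order row.
CREATE TABLE orders_flat (
    order_id      INTEGER PRIMARY KEY,
    customer_name TEXT NOT NULL,
    customer_city TEXT NOT NULL
);
INSERT INTO orders_flat VALUES (1, 'Jane Doe', 'Boston'), (2, 'Jane Doe', 'Boston');

-- Normalized: customer attributes are stored once and referenced by key.
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    city        TEXT NOT NULL
);
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(customer_id)
);
INSERT INTO customers VALUES (1001, 'Jane Doe', 'Boston');
INSERT INTO orders VALUES (1, 1001), (2, 1001);
""")

# Changing the city touches every matching row in the flat table...
flat_rows_changed = conn.execute(
    "UPDATE orders_flat SET customer_city = 'Chicago' WHERE customer_name = 'Jane Doe'"
).rowcount

# ...but only one row in the normalized design.
normalized_rows_changed = conn.execute(
    "UPDATE customers SET city = 'Chicago' WHERE customer_id = 1001"
).rowcount

print(flat_rows_changed, normalized_rows_changed)
```

The flat table pays for its simplicity with repeated writes and the risk of rows drifting out of sync. The normalized design pays with an extra join at read time, which is the cost that selective denormalization tries to avoid.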

Consistency and integrity constraints

Consistency and integrity constraints are crucial to building an efficient database system. Integrity constraints enforce rules on the data in your database, while consistency creates reliability. Together, these two ensure your database does not contain any invalid or contradictory information.

Below are some key integrity constraint types you can use when designing your database:

- Primary key: Identifies each row in a table with a unique value.

- Foreign key: Maintains referential integrity between tables, ensuring relationships remain consistent.

- NOT NULL: Makes sure that columns do not have missing values.

- UNIQUE: Prevents duplicate entries in a column or a set of columns.

- CHECK: Uses specified conditions to limit the values that can be entered into a column.

Examples of primary keys and foreign keys across different types of tables

Primary keys

1. Customers table

| CustomerID (PK) | FirstName | LastName | Email |
|---|---|---|---|
| 1001 | Jane | Doe | [email protected] |
| 1002 | John | Smith | [email protected] |

Primary key: CustomerID

- Ensures each customer is uniquely identifiable even if names or emails are similar.

2. Product table

| ProductID (PK) | ProductName | Price |
|---|---|---|
| P001 | Wireless mouse | 25.00 |
| P002 | Keyboard | 40.00 |

Primary key: ProductID

- Prevents duplication of products and simplifies inventory tracking.

Foreign keys

1. Orders table referencing the customers table

Customers table

| CustomerID (PK) | Name | Email |
|---|---|---|
| 1001 | Alice Smith | [email protected] |
| 1002 | Bob | [email protected] |

Orders table

| OrderID (PK) | CustomerID (FK) | OrderDate |
|---|---|---|
| 5001 | 1001 | 2025-05-18 |
| 5002 | 1002 | 2025-05-19 |

Foreign key: CustomerID in the Orders table

- Links each order to an existing customer in the Customers table.

- Prevents placing an order for a customer who doesn’t exist.
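The behavior described above can be sketched with Python's built-in `sqlite3` module. The tables mirror the Customers and Orders examples with the email column left out for brevity. The helper `try_insert_order` is a hypothetical name introduced for illustration. Note that SQLite enforces foreign keys only when `PRAGMA foreign_keys` is switched on for the connection; most server databases enforce them by default.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite-specific: foreign key enforcement is off unless enabled per connection.
conn.execute("PRAGMA foreign_keys = ON")

conn.executescript("""
CREATE TABLE Customers (
    CustomerID INTEGER PRIMARY KEY,
    Name       TEXT NOT NULL
);
CREATE TABLE Orders (
    OrderID    INTEGER PRIMARY KEY,
    CustomerID INTEGER NOT NULL REFERENCES Customers(CustomerID),
    OrderDate  TEXT NOT NULL
);
INSERT INTO Customers VALUES (1001, 'Alice Smith'), (1002, 'Bob');
INSERT INTO Orders VALUES (5001, 1001, '2025-05-18');
""")

def try_insert_order(order_id, customer_id, order_date):
    """Return True if the insert succeeds, False if a constraint rejects it."""
    try:
        conn.execute(
            "INSERT INTO Orders VALUES (?, ?, ?)",
            (order_id, customer_id, order_date),
        )
        return True
    except sqlite3.IntegrityError:
        return False

valid_order_accepted = try_insert_order(5002, 1002, "2025-05-19")      # customer exists
orphan_order_accepted = try_insert_order(5003, 9999, "2025-05-20")     # no such customer
duplicate_order_accepted = try_insert_order(5001, 1001, "2025-05-21")  # OrderID already used

print(valid_order_accepted, orphan_order_accepted, duplicate_order_accepted)
```

The second insert fails because of the foreign key, and the third fails because of the primary key, so both rules are enforced by the database itself rather than by application code.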

Scalability considerations

Scalability considerations are another vital principle for designing a solid database system. The quality of your database design is not only measured by how well it serves today’s needs. It is also measured by how well it can accommodate future growth. This is where scalability becomes essential.

To ensure your database scales easily as your data volume increases, here are some techniques you can use when designing your database.

- Indexing: Indexing helps speed up query performance, especially as data volume increases. Smart use of indexes on frequently searched columns reduces query time.

- Partitioning: Partitioning is another technique you can use to improve your database scalability. This technique is used to divide a large table into smaller, more manageable pieces (based on date ranges, IDs, or other criteria), thereby improving performance and maintenance.

- Data sharding: While partitioning works well for large tables on a single server, data sharding suits massive systems. It involves splitting data across multiple servers so that the load is shared among them, improving access speed.

These scalability techniques help you create systems that are not only fast and efficient but also durable and simple to manage.
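As a rough illustration of the sharding idea, the sketch below routes each customer to one of several shards with a stable hash. Everything here is an assumption made for the example: the shard count, the function name `shard_for`, and the use of in-memory lists as stand-ins for separate servers.

```python
import hashlib

SHARD_COUNT = 4  # hypothetical number of database servers

def shard_for(customer_id: int, shard_count: int = SHARD_COUNT) -> int:
    """Map a key to a shard index using a hash that is stable across runs."""
    digest = hashlib.sha256(str(customer_id).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count

# In-memory lists stand in for separate servers.
shards = {index: [] for index in range(SHARD_COUNT)}
for customer_id in range(1001, 1021):
    shards[shard_for(customer_id)].append(customer_id)

for index, members in shards.items():
    print(index, members)
```

Simple modulo routing like this moves most keys to a different shard whenever the shard count changes. Systems that expect to add servers often use consistent hashing or a lookup table instead.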

Best practices for database schema design

Your database design is incomplete without a strong schema. This is because the database schema defines how data is structured and stored. It is critical in ensuring data integrity, performance, and maintainability.

Also, a good database schema reduces technical debt and simplifies onboarding for new team members. Following best practices for database schema design helps in current development and prepares your system for future growth and evolving business requirements.

Here are some best practices for designing a solid database schema.

Schema naming conventions

One of the most overlooked yet essential best practices for building a solid schema is the use of a consistent naming convention. Thoughtful naming conventions enhance the readability, maintainability, and collaboration on your database. When choosing names for your schema objects, ensure they are descriptive, intuitive, and standardized to improve clarity and reduce the need for extensive documentation. The following tips will help you choose the perfect name for your schema.

- Don’t abbreviate if it is unclear what the abbreviation means. Instead, use full, meaningful names for tables and columns. For example, use `CustomerOrders` instead of `CustOrd`. This improves readability, especially for new team members.

- Maintain consistency in naming patterns (e.g., use either singular or plural names across all tables, not a mix).

- Include prefixes or suffixes for clarity where needed (e.g., `user_id`, `order_status_code`).

- Stick to a standard casing format, such as snake_case or camelCase, and maintain the format you choose throughout the schema.

Good naming conventions make schemas self-explanatory and reduce the need for external documentation, especially when working with large or distributed teams.

Modularization for maintainability

As databases grow, they get more challenging to manage. One of the best ways to manage this is to use schema modularization.

Schema modularization involves breaking your schema into logical, functional modules or domains. To do this, you’ll group related tables into separate schemas or logical areas. For example, you might have Sales, Inventory, and UserManagement schemas within the same database.

Using schema-level or modular design separation helps you organize data based on business functions or microservices. This approach allows teams to work independently on specific sections of the schema without impacting unrelated components. It makes your database more maintainable, easier to scale, and less error-prone. It also allows you to apply security and permissions by module and supports role-based access control more effectively.

With a modular structure, your database evolves as your business does.
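The following sketch shows the grouping idea with Python's built-in `sqlite3` module. SQLite has no `CREATE SCHEMA` statement, so attached databases stand in for schemas here; that substitution, along with the module names `sales` and `inventory` and the sample rows, is an assumption of this example. On servers such as PostgreSQL or SQL Server you would typically create named schemas instead.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Each attached database acts as a separate namespace (a stand-in for a schema).
conn.execute("ATTACH DATABASE ':memory:' AS sales")
conn.execute("ATTACH DATABASE ':memory:' AS inventory")

conn.executescript("""
CREATE TABLE inventory.products (
    product_id   INTEGER PRIMARY KEY,
    product_name TEXT NOT NULL
);
CREATE TABLE sales.orders (
    order_id   INTEGER PRIMARY KEY,
    product_id INTEGER NOT NULL,
    quantity   INTEGER NOT NULL
);
INSERT INTO inventory.products VALUES (1, 'Wireless mouse');
INSERT INTO sales.orders VALUES (5001, 1, 2);
""")

# Qualified names make it obvious which module owns each table.
rows = conn.execute("""
    SELECT o.order_id, p.product_name, o.quantity
    FROM sales.orders AS o
    JOIN inventory.products AS p ON p.product_id = o.product_id
""").fetchall()

module_names = [row[1] for row in conn.execute("PRAGMA database_list")]
print(rows, module_names)
```

One limitation of this stand-in is that SQLite does not enforce foreign keys across attached databases, so the link between `sales.orders` and `inventory.products` is by convention only.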

Below are two tables showing a non-optimized database schema design and an optimal one that follows the best practices of a well-designed database schema.

Non-optimized database schema design

| Table name | Columns | Issues |
|---|---|---|
| Customer_Info | CustID, Name, Email, Phone, Address, OrderID, OrderDate, ProductName | Redundant data (customer + order + product in one table); no normalization; mixed responsibilities |
| Orders2023 | OID, CID, PName, Qty, Date | Inconsistent naming (OID, CID, PName); year-specific table name (scalability issue) |
| Product_table | PID, ProdName, Cust_ID, QtySold | Redundant customer link; does not follow normalization rules (product table storing sales/customer info) |
| Invoices_List | InvoiceID, CustName, Amount, Date | No foreign key to Customer_Info; stores the customer’s name instead of ID |
| Shipping | ShipID, OrderId, Customer, ShipDate, Address | Inconsistent customer reference; redundant address info; no normalization |

Optimal database schema design

| Table name | Columns | Best practices applied |
|---|---|---|
| Customers | CustomerID (PK), Name, Email, Phone, Address | Stores customer details only (normalized); clear naming; Primary Key (PK) defined |
| Products | ProductID (PK), ProductName | Stores product info independently; avoids mixing sales or customer info |
| Orders | OrderID (PK), CustomerID (FK), OrderDate | Links orders to customers; Foreign Key (FK) ensures referential integrity |
| OrderDetails | OrderDetailID (PK), OrderID (FK), ProductID (FK), Quantity | Supports the relationship between orders and products; fully normalized |
| Invoices | InvoiceID (PK), OrderID (FK), Amount, InvoiceDate | Linked to orders via FK; avoids customer name duplication |
| ShippingDetails | ShippingID (PK), OrderID (FK), ShippingDate, ShippingAddress | Linked properly via OrderID; eliminates redundant customer/address info |

Key improvements

| Issues in the non-optimized database schema design | Improvements in the optimal database schema design |
|---|---|
| Customer and order data are stored in the same table | Customers and Orders tables are separated using foreign keys |
| Inconsistent naming (CustID, CID, Customer) | Unified, clear naming conventions (CustomerID, OrderID, etc.) |
| Year-specific tables (Orders2023) | General-purpose Orders table allows scalability and flexibility |
| Redundant product/customer info in multiple tables | Modular design with OrderDetails |
| Missing foreign keys | All relationships are defined with foreign keys, ensuring referential integrity |
| Mixed responsibilities in one table | Tables have single, focused responsibilities, supporting maintenance and performance |
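As a sketch of how the optimal design might look in practice, the example below creates four of the six tables with Python's built-in `sqlite3` module and reads an order back through its relationships. Invoices and ShippingDetails are left out for brevity. The column types, the `UNIQUE` and `CHECK` rules, the integer product IDs, and the sample rows are assumptions added for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite-specific switch

conn.executescript("""
CREATE TABLE Customers (
    CustomerID INTEGER PRIMARY KEY,
    Name       TEXT NOT NULL,
    Email      TEXT NOT NULL UNIQUE
);
CREATE TABLE Products (
    ProductID   INTEGER PRIMARY KEY,
    ProductName TEXT NOT NULL
);
CREATE TABLE Orders (
    OrderID    INTEGER PRIMARY KEY,
    CustomerID INTEGER NOT NULL REFERENCES Customers(CustomerID),
    OrderDate  TEXT NOT NULL
);
CREATE TABLE OrderDetails (
    OrderDetailID INTEGER PRIMARY KEY,
    OrderID       INTEGER NOT NULL REFERENCES Orders(OrderID),
    ProductID     INTEGER NOT NULL REFERENCES Products(ProductID),
    Quantity      INTEGER NOT NULL CHECK (Quantity > 0)
);
INSERT INTO Customers VALUES (1001, 'Jane Doe', 'jane@example.com');
INSERT INTO Products VALUES (1, 'Wireless mouse'), (2, 'Keyboard');
INSERT INTO Orders VALUES (5001, 1001, '2025-05-18');
INSERT INTO OrderDetails VALUES (1, 5001, 1, 2), (2, 5001, 2, 1);
""")

# Each fact is stored once; the joins reassemble the full picture on demand.
order_lines = conn.execute("""
    SELECT c.Name, o.OrderID, p.ProductName, d.Quantity
    FROM OrderDetails AS d
    JOIN Orders    AS o ON o.OrderID = d.OrderID
    JOIN Customers AS c ON c.CustomerID = o.CustomerID
    JOIN Products  AS p ON p.ProductID = d.ProductID
    ORDER BY d.OrderDetailID
""").fetchall()

for line in order_lines:
    print(line)
```

Because the customer name lives only in Customers and the product name only in Products, renaming either one is a single-row update that every order picks up automatically.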

Relational database design best practices

So far, you have learned some key principles for designing a solid database and best practices for database schemas. However, relational database design is another key area to consider when building your database system.

It takes more than just linking tables together to design an efficient relational database. Designing a relational database is about creating a well-structured, efficient, and scalable system that accurately reflects real-world relationships. When this is done right, your data becomes easy to access, update, and maintain.

Below are some best practices for designing a solid relational database.

Primary and foreign keys

At the heart of every relational database are primary and foreign keys, which define how tables relate to one another.

A primary key is a unique identifier for each record in a table. It ensures that no two rows share the same key value and provides a stable reference for establishing relationships with other tables. When choosing a primary key for a table, always choose one that is immutable and consistently unique. Auto-incrementing integers are a common choice.

A foreign key is used to link one table to another by referencing the primary key of the related table. This enforces referential integrity and ensures that a record in one table cannot reference a nonexistent entry in another.

Using primary and foreign keys appropriately helps you build strong and traceable connections between data entities. It also helps to ensure that complex systems are accurate and consistent.

Indexing for faster queries

Indexes are also one of the most effective tools for improving query performance and building a solid relational database. Here are a few ways to ensure you are indexing columns correctly:

- Create indexes on columns that are frequently used in `WHERE` clauses, `JOIN`s, or `ORDER BY` operations. This allows the database engine to find data more quickly and reduce the need for full table scans.

- Use composite indexes when queries involve multiple columns, but be sure the column order matches the most common queries.

- Avoid excessive indexing, especially on columns with low selectivity (e.g., columns that only have a few distinct values like “Yes”/“No”). Indexes take up storage and slow down `INSERT`, `UPDATE`, and `DELETE` operations.

- Regularly monitor and tune indexes based on query performance and access patterns to ensure your indexing strategy stays efficient.
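To see the effect of an index rather than take it on faith, you can ask the database for its query plan before and after creating one. The sketch below does this with Python's built-in `sqlite3` module and SQLite's `EXPLAIN QUERY PLAN`. The table, the generated sample data, the index name, and the helper `plan` are assumptions made for the example, and the exact plan wording varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, order_date TEXT)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, order_date) VALUES (?, ?)",
    [(i % 100, f"2025-01-{(i % 28) + 1:02d}") for i in range(1000)],
)

QUERY = "SELECT order_id FROM orders WHERE customer_id = ? ORDER BY order_date"

def plan(sql, params):
    """Return SQLite's query plan for a statement as a single string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[-1] for row in rows)  # last column holds the description

plan_before = plan(QUERY, (42,))

# Composite index: the equality-filtered column first, then the sort column.
conn.execute("CREATE INDEX idx_orders_customer_date ON orders (customer_id, order_date)")

plan_after = plan(QUERY, (42,))

print("before:", plan_before)
print("after: ", plan_after)
```

Before the index exists, the plan reports a scan of the whole table; afterwards it reports a search that uses `idx_orders_customer_date`. Putting `customer_id` first matches the query, which filters on that column and then sorts by `order_date`.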

Choosing data types

Another important best practice for building a strong relational database is selecting the right data types for each column in your table. Thoughtful data type selection reduces memory usage, improves query speed, and enforces data accuracy. When choosing your data type, consider the following:

- Use the smallest data type that adequately stores the values. For example, prefer `INT` over `BIGINT` when the value range allows it, or `VARCHAR(100)` instead of `TEXT` when the column doesn’t require unlimited length.

- Avoid generic or oversized types like `TEXT`, `BLOB`, or unbounded `VARCHAR` unless necessary, as they can impact performance and indexing.

- Match data types closely to the nature of the data. Use `DATE` or `DATETIME` for dates, `BOOLEAN` for true/false flags, and enumerations or constraints for fixed-value fields.

Combining strong key structures, smart indexing, and proper data types lays the foundation for a relational database that performs well, scales gracefully, and supports clean, consistent data.

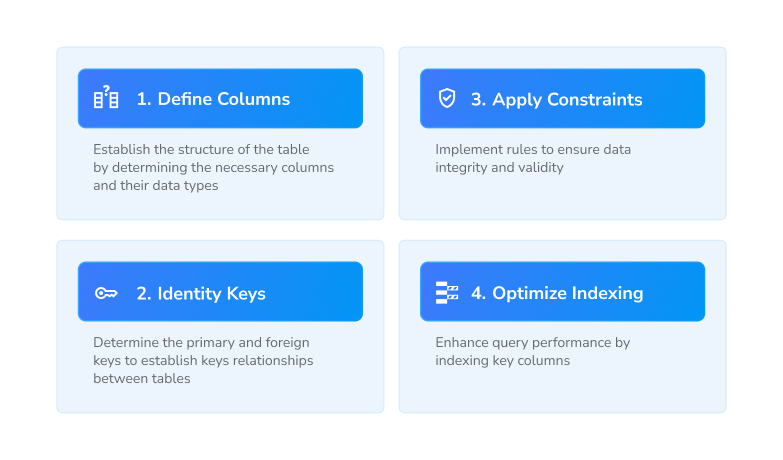

Database table design best practices

In database design, tables are where your data lives, and their structure directly impacts performance, maintainability, and flexibility. Designing efficient tables means thinking not just about what your data looks like today, but how it might evolve in the future. The following best practices help you design the right database table for your application.

Data normalization in tables

Data normalization involves organizing data into multiple related tables to reduce redundancy and improve integrity. It usually means applying the first few normal forms (1NF, 2NF, 3NF), which help eliminate anomalies during insertions, deletions, and updates.

Breaking large, flat tables into smaller, logically related ones ensures that each piece of data is stored only once. This reduces data duplication and makes updates more efficient and consistent.

However, while this approach improves data integrity and simplifies maintenance, over-normalization can hurt performance because queries then need many complex JOINs to reassemble the data. In read-heavy environments, limited denormalization can be beneficial but should be carefully balanced.

Managing table size

As your application grows, so does the data. Designing your database tables to handle large volumes of data is essential for long-term scalability and performance. Here are a few things to keep in mind while doing this:

- Avoid using wide tables with hundreds of columns unless absolutely necessary. Keep tables focused on specific entities or functions.

- Implement partitioning, which involves dividing a large table into smaller, more manageable pieces based on criteria like date, region, or ID. This approach improves query performance and simplifies maintenance.

- Use archiving strategies to move historical or infrequently accessed data into separate tables or databases. This keeps your primary tables lean and responsive.

Designing with growth in mind ensures your database performs efficiently even under heavy loads.
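The archiving strategy mentioned above can be sketched as a copy-then-delete step inside one transaction. The example uses Python's built-in `sqlite3` module. The table names, the cutoff date, and the function `archive_orders_before` are hypothetical choices made for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (
    order_id   INTEGER PRIMARY KEY,
    order_date TEXT NOT NULL   -- ISO 8601 dates compare correctly as text
);
CREATE TABLE orders_archive (
    order_id   INTEGER PRIMARY KEY,
    order_date TEXT NOT NULL
);
INSERT INTO orders VALUES
    (1, '2022-03-01'), (2, '2023-07-15'), (3, '2025-01-10'), (4, '2025-05-18');
""")

def archive_orders_before(cutoff_date):
    """Move rows older than cutoff_date into the archive table atomically."""
    with conn:  # commits on success, rolls back if either statement fails
        conn.execute(
            "INSERT INTO orders_archive "
            "SELECT order_id, order_date FROM orders WHERE order_date < ?",
            (cutoff_date,),
        )
        moved = conn.execute(
            "DELETE FROM orders WHERE order_date < ?", (cutoff_date,)
        ).rowcount
    return moved

moved_count = archive_orders_before("2025-01-01")
live_count = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
archived_count = conn.execute("SELECT COUNT(*) FROM orders_archive").fetchone()[0]

print(moved_count, live_count, archived_count)
```

Running both statements in a single transaction means a failure partway through cannot leave a row deleted without having been archived, or archived twice.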

Enforcing data integrity with constraints

Data quality is non-negotiable in any serious application. The easiest way to improve the quality of your data is by enforcing data integrity with constraints. Constraints serve as built-in rules that ensure only valid, accurate, and consistent data is stored in your tables. Here are some constraints to use when designing your database table:

- NOT NULL: to ensure that required fields are never left empty.

- UNIQUE constraints: to prevent duplicate values in columns that must remain distinct, like email addresses or usernames.

- CHECK constraints: to enforce custom rules (e.g., salary must be greater than zero, age must be over 18).
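The three constraints above can be tried out in a few lines. This is a minimal sketch with Python's built-in `sqlite3` module; the `employees` table, its columns, the sample values, and the helper `insert_employee` are assumptions introduced for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
CREATE TABLE employees (
    employee_id INTEGER PRIMARY KEY,
    email       TEXT    NOT NULL UNIQUE,
    salary      REAL    NOT NULL CHECK (salary > 0),
    age         INTEGER NOT NULL CHECK (age > 18)
)
""")

def insert_employee(email, salary, age):
    """Return 'ok', or the database's error message if a constraint rejects the row."""
    try:
        with conn:
            conn.execute(
                "INSERT INTO employees (email, salary, age) VALUES (?, ?, ?)",
                (email, salary, age),
            )
        return "ok"
    except sqlite3.IntegrityError as exc:
        return str(exc)

results = [
    insert_employee("jane@example.com", 52000, 30),  # valid row
    insert_employee(None, 52000, 30),                # violates NOT NULL
    insert_employee("jane@example.com", 48000, 41),  # violates UNIQUE
    insert_employee("john@example.com", -5, 41),     # violates CHECK on salary
    insert_employee("sam@example.com", 30000, 17),   # violates CHECK on age
]

for outcome in results:
    print(outcome)
```

Only the first row is stored. The other four are rejected by the database with a message naming the kind of constraint that failed, regardless of which application or script attempted the insert.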

Common database design mistakes to avoid

Having a solid database design is not optional. It is crucial. However, achieving this can be challenging and prone to errors if not approached expertly.

Whether you’re a beginner or a seasoned developer, understanding the following common database design mistakes and how to avoid them can save you hours of frustration and pave the way for a scalable, maintainable, and high-performing system.

Skipping normalization

Normalization involves organizing your data into separate tables to eliminate redundancy and ensure logical data dependencies. When you skip this, the following might happen:

- Data gets duplicated across tables, increasing the risk of inconsistencies and update anomalies.

- As data grows, managing redundant information becomes time-consuming and error-prone.

- Reports and queries become unreliable because there’s no single source of truth.

Best practices

- Normalize step by step when building your database schema and only denormalize if necessary for performance.

- Use at least 3NF (Third Normal Form) at the initial stage of your database design. This will help address common duplication problems.

- Use entity-relationship (ER) diagrams to see the relationships among your tables and identify areas that require normalization quickly.

Overuse of JOINs

JOINs are essential in relational databases, but overusing them, especially in complex queries, can significantly degrade performance and cause the following problems:

- `JOIN`s across multiple large tables increase query complexity and response time.

- Poorly indexed foreign keys or mismatched data types can further slow things down.

- Over-reliance on `JOIN`s can make the schema harder to understand and maintain.

Best practices

- Use joins wisely and ensure that indexes support them efficiently.

- Simplify data retrieval by caching frequently accessed reports or aggregating data in summary tables.

- Denormalize selectively if it helps reduce unnecessary joins in critical queries.
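A summary table, as suggested above, can be sketched like this with Python's built-in `sqlite3` module. The table names, the sample quantities, and the function `refresh_summary` are hypothetical; a full rebuild is used here for simplicity, although incremental updates are another option.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE order_details (
    order_detail_id INTEGER PRIMARY KEY,
    product_id      INTEGER NOT NULL,
    quantity        INTEGER NOT NULL
);
INSERT INTO order_details (product_id, quantity) VALUES
    (1, 2), (1, 3), (2, 1), (2, 4), (2, 5);

-- Summary table: one precomputed row per product.
CREATE TABLE product_sales_summary (
    product_id     INTEGER PRIMARY KEY,
    total_quantity INTEGER NOT NULL
);
""")

def refresh_summary():
    """Rebuild the summary from the detail rows in one transaction."""
    with conn:
        conn.execute("DELETE FROM product_sales_summary")
        conn.execute("""
            INSERT INTO product_sales_summary (product_id, total_quantity)
            SELECT product_id, SUM(quantity)
            FROM order_details
            GROUP BY product_id
        """)

refresh_summary()

# Reports read the small summary table instead of aggregating the detail rows.
summary = dict(conn.execute(
    "SELECT product_id, total_quantity FROM product_sales_summary"
).fetchall())

print(summary)
```

The trade-off is freshness: the summary is only as current as its last refresh, so it suits reports that can tolerate slightly stale numbers.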

Failing to plan for scalability

Designing databases for current needs without considering future data growth is one common mistake among developers. This approach can lead to serious scalability issues as the application gains users and stores more data.

Here are some issues that may occur when you fail to plan for scalability:

- Tables balloon in size, leading to slower queries and higher storage costs.

- Performance bottlenecks appear because indexes weren’t designed to handle large volumes.

- Maintenance becomes a nightmare due to a lack of partitioning or archiving strategies.

Best practices

- Choose appropriate data types and avoid oversized columns.

- Index strategically, especially for columns used in filtering, sorting, and joining.

- Plan for horizontal scalability with partitioning, sharding, or even distributed database architectures when necessary.

How dbForge Edge can help

No doubt, the quality of your database design directly impacts the performance of your application. However, building a solid database design can be challenging and time-consuming. This is where dbForge Edge comes in.

dbForge Edge is an all-in-one database powerhouse designed to help you follow database design best practices with confidence, precision, and ease.

Whether you’re creating a new database or optimizing an existing one, dbForge Edge provides all the tools you need to work more efficiently and quickly.

Here’s how dbForge Edge helps streamline your database design process.

Visual schema designer

dbForge Edge includes a visual schema designer that lets you create and manage tables, relationships, constraints, and keys through an intuitive drag-and-drop interface, so you don’t have to write complex SQL code by hand. As you build your schema with this feature, you can spot design issues early and maintain a clear overview of your structure.

Visual query builder

The dbForge Edge query builder is another excellent tool for creating a solid, efficient, and scalable database design. It simplifies the process of creating complex queries with drag-and-drop functionality. With this, you can build complex SQL queries without writing a single line of code. All you have to do is drag and drop tables, set conditions, and preview results in real time. No more spending time writing constraints and code for your database design.

Performance analyzer

Use the dbForge Edge performance analyzer feature to identify bottlenecks, optimize indexes, and fine-tune your database design for peak efficiency. With this feature, you can optimize your database for speed and scalability, detect slow queries, analyze execution plans, and fine-tune indexing. Also, the performance analyzer lets you identify and remove performance bottlenecks before they become production problems.

dbForge Edge lets you handle all aspects of building a solid database design. You can perform schema comparison and synchronization and generate test data, all in one platform.

What’s more, dbForge Edge supports multiple database systems, including MySQL, SQL Server, Oracle, and PostgreSQL.

Download the free trial of dbForge Edge today and experience the difference a professional-grade tool can make.

Conclusion

A well-designed database is the foundation of every successful data-driven application. From normalization and integrity constraints to indexing and scalability planning, each principle plays a vital role in ensuring long-term performance and maintainability.

By avoiding common pitfalls and embracing best practices, you set your systems up for success today and in the future. And with a powerful tool like dbForge Edge, you can streamline your entire database design workflow, avoid costly mistakes, and bring your data architecture to the next level.

Start building solid databases today. Try dbForge Edge and design with confidence.

FAQ

How do database table design best practices improve database performance and data integrity?

Database design best practices like normalization, proper indexing, and enforcing primary and foreign keys reduce data redundancy, prevent inconsistencies, and enhance query efficiency. A well-structured schema minimizes maintenance issues and ensures the database scales effectively with growing data.

What are the key database design principles that every developer should follow?

Every developer should know key database design principles like normalization (up to at least 3NF), consistent naming conventions, using appropriate data types, enforcing constraints (PK, FK, NOT NULL), indexing strategically, and documenting schema decisions. These practices lay a solid foundation for performance, integrity, and scalability.

How can I implement relational database best practices to ensure efficient queries and indexing?

Start with a normalized schema, define relationships using foreign keys, and create indexes on frequently queried columns. Analyze query patterns to identify performance bottlenecks and avoid over-indexing, which can slow down write operations.

What are some of the best practices for database schema design to improve long-term maintainability?

Use clear and consistent naming conventions, separate concerns with normalized tables, define all constraints, and document your design choices. Also, avoid hardcoding logic into the schema, and regularly review and refactor your schema as requirements evolve.

Can dbForge Edge help me optimize database table design best practices for high-performance queries?

Yes. dbForge Edge offers tools like Query Profiler, Execution Plan Analyzer, and Index Manager to help identify slow queries, recommend indexes, and analyze performance bottlenecks.

Can dbForge Edge help me check if my database design follows the best practices for relational databases?

Absolutely. With features like the Database Diagram, Database Designer, and Schema Comparison, dbForge Edge helps you visualize relationships, spot design flaws, and align your schema with relational best practices.

How can dbForge Edge improve my database schema design best practices with its visual tools?

dbForge Edge provides drag-and-drop database modeling, visual ER diagrams, and schema synchronization features that simplify complex design tasks. These tools help enforce normalization, clarify relationships, and ensure your schema aligns with best practices.

How can I use dbForge Edge to implement database table design best practices for large-scale projects?

For large projects, dbForge Edge offers version control integration, schema change tracking, and project-based organization to manage complex schemas.